How AI is Reshaping DevOps: The Future of Software Delivery on GCP

The Evolution of DevOps: How AI is Changing Software Delivery

Artificial intelligence is transforming how software is built, released, and maintained in enterprise DevOps. Companies are improving the automation, efficiency, and reliability of their DevOps processes by using the AI and ML tools native to the Google Cloud Platform (GCP). These changes require not only new technologies but also a rethinking of software delivery. Traditional DevOps methods have their advantages, but they can struggle to keep up with the growing complexity of modern systems. AI addresses this gap by providing predictive insights, automated decision-making, and continuous optimization. This combination of DevOps and AI is commonly known as “AIOps,” and GCP’s cloud services provide the building blocks to put it into practice.

Implementing AI-Powered DevOps on GCP

1. AI-Driven Monitoring and Observability

Managing systems depends on timely and effective monitoring and observability, and AI makes both more effective. It helps detect issues early, reduce false alarms, and predict potential outages before they happen.

GCP Services in Action

- Cloud Monitoring & Logging: Collect metrics, logs, and traces from applications and infrastructure.

- BigQuery: Stores historical data for trend and pattern analysis.

- AI Platform: Trains custom machine learning models for anomaly detection and predictive analytics.

Workflow

- Applications send metrics and logs to Cloud Monitoring.

- BigQuery processes the data to find trends and anomalies.

- AI Platform trains models that predict potential failures, and alerts are sent through Cloud Pub/Sub to tools like Slack or email.
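The anomaly-detection step in this workflow can be illustrated with a minimal sketch. It is a plain rolling z-score check run locally, not a trained model and not a GCP API; the function name `find_anomalies`, the window size, the threshold, and the sample latency values are all assumptions made for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indices of points that deviate strongly from the trailing window.

    A point is flagged when it lies more than `threshold` standard deviations
    from the mean of the `window` points immediately before it.
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all counts as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical request latencies in milliseconds, with one obvious spike.
latency_ms = [120, 118, 125, 122, 119, 121, 480, 123, 120]
print(find_anomalies(latency_ms))
```

In a real setup the input would come from exported metrics rather than a hard-coded list, and a trained model would likely replace the fixed threshold.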

Benefits

- Earlier detection of issues, with fewer false alarms.

- Potential outages predicted before they affect users.

2. Intelligent Automation in CI/CD Pipelines

DevOps depends on Continuous Integration and Continuous Deployment (CI/CD) pipelines, and AI improves these pipelines in several ways. It automates repetitive tasks, accelerates testing, and predicts deployment risk. For instance, it can flag potential bugs and vulnerabilities, suggest code optimizations, and generate test cases automatically.

GCP Services in Action

- Cloud Build: GCP’s fully managed CI/CD platform, which can be integrated with AI tools to check code quality and trigger builds automatically.

- Vertex AI: Trains machine learning models that predict test coverage and estimate which code changes are high-risk.

- Cloud Deploy: Automates deployment strategies such as canary or blue-green, with rollout decisions informed by AI-based risk assessment.

Workflow

- Developers commit code to Cloud Source Repositories, triggering a build in Cloud Build.

- Vertex AI identifies the highest-risk changes in the commit.

- Cloud Deploy assesses the deployment risk and rolls the change out gradually to GKE or Cloud Run.
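To make the idea of risk-based rollout concrete, here is a small sketch of how a risk score might map to canary stages. The features, weights, thresholds, and the names `Change`, `risk_score`, and `rollout_plan` are assumptions for illustration. A hand-written heuristic stands in for a trained Vertex AI model, and the percentages are not Cloud Deploy defaults.

```python
from dataclasses import dataclass


@dataclass
class Change:
    files_changed: int
    lines_changed: int
    touches_config: bool
    recent_failure_rate: float  # share of recent deployments that failed, 0.0 to 1.0


def risk_score(change):
    """Combine a few change features into a score between 0 and 1."""
    score = min(change.files_changed / 50, 1.0) * 0.3
    score += min(change.lines_changed / 1000, 1.0) * 0.3
    score += 0.2 if change.touches_config else 0.0
    score += min(max(change.recent_failure_rate, 0.0), 1.0) * 0.2
    return round(score, 3)


def rollout_plan(score):
    """Return the traffic percentages to step through for a given risk score."""
    if score < 0.3:
        return [100]              # low risk: deploy everywhere at once
    if score < 0.6:
        return [25, 50, 100]      # medium risk: short canary
    return [5, 25, 50, 100]       # high risk: cautious canary


small_fix = Change(files_changed=2, lines_changed=40,
                   touches_config=False, recent_failure_rate=0.0)
print(risk_score(small_fix), rollout_plan(risk_score(small_fix)))
```

The design choice here is to keep scoring and rollout policy separate, so a learned model could later replace `risk_score` without changing how stages are chosen.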

Benefits

- Faster release cycles and less manual intervention.

- Quality deployments, with an AI-based risk assessment.

3. Security and Compliance

AI can strengthen security on several fronts: threat identification, security rule enforcement, compliance checking, and automated vulnerability scanning.

GCP Services in Action

- Security Command Center: Identifies vulnerabilities and misconfigurations.

- reCAPTCHA Enterprise: Protects web-facing endpoints from malicious bots.

- DLP API: Masks sensitive data in logs and pipelines.
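As a rough illustration of what masking sensitive data in logs means, the sketch below redacts email addresses and card-like numbers from a log line. It is a local regex-based stand-in, not the DLP API, and the patterns, placeholder labels, and the `mask_line` helper are assumptions; real detection is considerably more thorough.

```python
import re

# Illustrative patterns only; a managed service detects many more data types.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def mask_line(line):
    """Replace every match of each pattern with a bracketed label."""
    for label, pattern in PATTERNS.items():
        line = pattern.sub(f"[{label}]", line)
    return line


log_line = "user=a.b@example.com card=4111 1111 1111 1111 status=ok"
print(mask_line(log_line))
```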

Use Case

- Scan container images in Artifact Registry for vulnerabilities.

- Use Security Command Center to automatically revoke overly permissive IAM permissions.
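The IAM use case can be sketched as a check over a policy document. The example below assumes a policy in the standard IAM JSON shape (a `bindings` list of `role` and `members`) and flags bindings that look too broad. The rules in `BROAD_ROLES` and `PUBLIC_MEMBERS`, the function name, and the sample project are illustrative assumptions, not Security Command Center's actual detection logic.

```python
# Roles and members treated as risky in this sketch (an assumption, not an official list).
BROAD_ROLES = {"roles/owner", "roles/editor"}
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def find_risky_bindings(policy):
    """Return (role, member, reason) tuples for bindings that look too permissive."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append((role, member, "public access"))
            elif role in BROAD_ROLES and member.startswith("serviceAccount:"):
                findings.append((role, member, "broad role on service account"))
    return findings


# Hypothetical policy for illustration.
policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
        {"role": "roles/editor",
         "members": ["serviceAccount:ci@example-project.iam.gserviceaccount.com"]},
        {"role": "roles/viewer", "members": ["user:dev@example.com"]},
    ]
}
for finding in find_risky_bindings(policy):
    print(finding)
```

A revocation step would act on these findings. It is left out here because removing access automatically is a policy decision that deserves review.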

Benefits

- Enhanced security through AI-driven threat detection.

- Automated policy enforcement to maintain compliance.

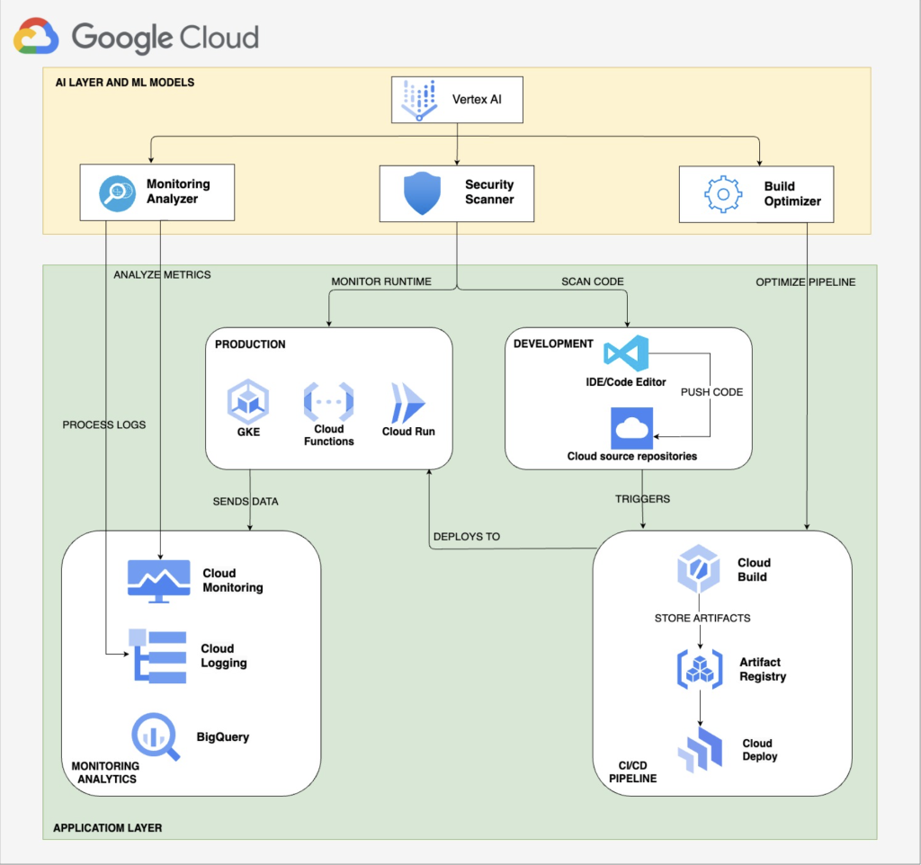

Architecture Diagram

GCP Architecture Diagram

Understanding the Flow of Architecture

1. Development Flow

- The journey starts in the development environment, where developers write code using AI coding assistants such as Codeium and Copilot.

- During coding, code intelligence AI provides real-time feedback, suggesting improvements, handling routine housekeeping, and spotting issues.

- Once the code is committed to Cloud Source Repositories:

  - AI conducts comprehensive scans that go beyond syntax checking.

  - AI examines the codebase for security vulnerabilities, analyzes dependencies, and validates compliance requirements.

  - These checks form the first line of defense against potential issues before the code advances further down the pipeline.

2. AI Operations Layer

- At the core of the architecture is the AI Operations Layer, which functions as the system’s central nervous system.

- The Vertex AI Platform continuously processes data from across the system:

  - Builds and updates the models that drive automated decisions.

  - Learns from events to predict and help prevent future issues.

  - Automatically trains, validates, and deploys models based on new operational data.

- The prediction engine applies these models in real time to make automated decisions about resource allocation, security responses, and similar concerns.

- Example uses include predicting Preemptible/Spot VM termination from historical data and right-sizing VMs.
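Right-sizing from historical data can be sketched with a simple percentile rule. The example assumes CPU utilization samples expressed as fractions of current capacity, a target utilization of 65%, and a fixed menu of vCPU sizes. These values and the helper names are assumptions, and a simple rule stands in for a learned model.

```python
def percentile(values, pct):
    """Nearest-rank percentile for an integer `pct` between 1 and 100."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # ceiling division
    return ordered[rank - 1]


def recommend_vcpus(cpu_utilization, current_vcpus, target=0.65,
                    available=(1, 2, 4, 8, 16, 32)):
    """Pick the smallest size that keeps 95th-percentile load under `target`."""
    peak = percentile(cpu_utilization, 95)
    needed = peak * current_vcpus / target
    for size in available:
        if size >= needed:
            return size
    return available[-1]  # demand exceeds the largest size on the menu


# Hypothetical samples: mostly idle 8-vCPU machine with one short burst.
samples = [0.2] * 19 + [0.9]
print(recommend_vcpus(samples, current_vcpus=8))
```

Using the 95th percentile instead of the maximum keeps a single short burst from dictating the machine size.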

3. CI/CD Pipeline

- Once the code reaches the CI/CD pipeline, a series of operations takes place under the direction of AI:

  - The build runs in Cloud Build, guided by the AI layer to optimize how each change is built.

  - The system adapts to the specific characteristics of each code change instead of applying a static process.

  - Test intelligence uses the characteristics of each change to pick the most relevant tests, reducing build time while preserving quality assurance.

  - Artifact Registry works with the AI layer to scan artifacts for vulnerabilities and check that they meet security criteria.

  - Beyond deciding where to deploy, the AI layer recommends deployment strategies based on system load, user patterns, and potential risks.
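Change-based test selection, as described above, can be sketched with a dependency map from tests to the source files they exercise. The map, file names, and `select_tests` function are hypothetical. A real system would derive the map from coverage data or a trained model, and the full-run fallback for unknown files is a design assumption chosen for safety.

```python
def select_tests(changed_files, test_dependencies):
    """Return the tests worth running for a set of changed files.

    `test_dependencies` maps each test to the source files it exercises.
    If any changed file is unknown to the map, fall back to the full suite
    so that an incomplete map never causes a test to be skipped silently.
    """
    changed = set(changed_files)
    known = set().union(*test_dependencies.values()) if test_dependencies else set()
    if changed - known:
        return sorted(test_dependencies)
    return sorted(test for test, deps in test_dependencies.items()
                  if changed & set(deps))


# Hypothetical dependency map for illustration.
deps = {
    "test_auth.py": ["auth.py", "db.py"],
    "test_cart.py": ["cart.py", "db.py"],
    "test_ui.py": ["ui.py"],
}
print(select_tests(["auth.py"], deps))
print(select_tests(["db.py"], deps))
```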

4. Production Environment Orchestration

- In production, the architecture maintains multiple deployment targets: GKE clusters, Cloud Functions, and Cloud Run.

- Anthos Service Mesh provides a service network that handles communication and scaling based on real-time conditions and AI predictions.

- The production environment is automated by AI:

  - Predicts resource needs and potential issues before they affect users.

  - Automatically adjusts system configurations for best performance.

  - Creates a self-tuning environment, one that adapts and corrects itself and becomes more efficient over time as AI learns from operational patterns.
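Predicting resource needs ahead of demand can be illustrated with a linear-trend forecast that feeds a replica calculation. The least-squares fit, the per-replica capacity, the replica bounds, and both function names are assumptions made for this sketch. It does not represent how GKE or Cloud Run autoscaling works internally.

```python
import math


def forecast_next(values):
    """Extrapolate the next value from a least-squares line through `values`."""
    if not values:
        raise ValueError("need at least one observation")
    n = len(values)
    if n < 2:
        return float(values[-1])
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in enumerate(values))
             / sum((x - x_mean) ** 2 for x in range(n)))
    return y_mean + slope * (n - x_mean)


def replicas_needed(forecast_load, capacity_per_replica,
                    min_replicas=1, max_replicas=20):
    """Convert a forecast load into a replica count within fixed bounds."""
    wanted = math.ceil(max(forecast_load, 0) / capacity_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical requests per second over the last four intervals.
recent_rps = [100, 200, 300, 400]
predicted = forecast_next(recent_rps)
print(predicted, replicas_needed(predicted, capacity_per_replica=120))
```

Scaling on the forecast instead of the latest observation is what lets capacity arrive before the load does, at the cost of occasional over-provisioning when the trend reverses.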

5. Monitoring and Analysis

- For continuous improvement, the system creates a closed-loop feedback mechanism:

  - Data is aggregated from all Cloud Monitoring and Logging sources.

  - Operations Suite brings all the streams together to correlate and analyze the data.

  - The AI layer then processes this information and finds patterns and anomalies that human operators would miss.

  - BigQuery analytics provides deep insight into system behavior, allowing AI to understand long-term patterns and make increasingly accurate predictions.

- These insights are fed back into the AI Operations Layer, creating a continuous cycle of learning and optimization that makes the whole system more intelligent over time.

6. Security and Compliance Integration

- Security is integrated throughout the architecture via Security Command Center and Cloud Asset Inventory.

- The AI layer continuously receives security telemetry and uses it to identify potential threats:

  - It often picks up subtle patterns that hint at security breaches.

  - It supports a proactive security posture, stopping threats before they affect the system.

- The architecture maintains compliance through continuous monitoring and automatic policy enforcement:

  - Enables real-time validation of compliance.

  - Makes automatic configuration adjustments to ensure security requirements are maintained.
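Compliance validation with automatic configuration adjustment can be sketched as a comparison between a resource's settings and a required baseline. The setting names, the baseline, and the `enforce_policy` function are hypothetical. Real enforcement would go through organization policies or similar controls, not a local dictionary merge.

```python
def enforce_policy(config, required):
    """Return (violations, corrected_config) for a resource configuration.

    `violations` maps each non-compliant setting to its current value
    (None when the setting is missing). `corrected_config` is a copy of
    `config` with every required setting applied.
    """
    violations = {key: config.get(key)
                  for key, expected in required.items()
                  if config.get(key) != expected}
    corrected = {**config, **required}
    return violations, corrected


# Hypothetical baseline and resource settings for illustration.
required = {"public_access": False, "encryption": "cmek"}
bucket = {"public_access": True, "encryption": "cmek", "region": "us-central1"}

violations, corrected = enforce_policy(bucket, required)
print(violations)
print(corrected)
```

Returning the violations alongside the corrected configuration keeps an audit trail of what was changed and why.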

7. The Learning Cycle

- This setup creates a continuous learning loop:

  - Every operation, from code commit to production deployment, generates data that feeds back into the AI systems.

  - The result is a continually evolving platform that keeps itself up to date and, more importantly, secure.

- It learns from successes as much as from failures:

  - Over time, it recognizes more precisely what is most effective in different situations.

- Learning is integral to every part of the pipeline:

  - From development to operations to security, the result is a single integrated system.
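Learning from both successes and failures can be illustrated with a tracker that records outcomes per situation and strategy, then prefers whichever strategy has worked best so far. The class name, the context and strategy labels, and the use of Laplace smoothing are assumptions for this sketch, which is far simpler than a production learning system.

```python
from collections import defaultdict


class OutcomeTracker:
    """Keep success and failure counts per (context, strategy) pair."""

    def __init__(self):
        self._counts = defaultdict(lambda: [0, 0])  # [successes, failures]

    def record(self, context, strategy, success):
        self._counts[(context, strategy)][0 if success else 1] += 1

    def success_rate(self, context, strategy):
        successes, failures = self._counts.get((context, strategy), (0, 0))
        # Laplace smoothing: an untried strategy starts at 0.5, not 0 or 1.
        return (successes + 1) / (successes + failures + 2)

    def best_strategy(self, context, strategies):
        return max(strategies, key=lambda s: self.success_rate(context, s))


tracker = OutcomeTracker()
for _ in range(3):
    tracker.record("peak-traffic", "canary", success=True)
for _ in range(2):
    tracker.record("peak-traffic", "rolling", success=False)

print(tracker.best_strategy("peak-traffic", ["canary", "rolling"]))
```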

Conclusion

Integrating AI into DevOps processes on GCP is redefining enterprise software delivery. When adopted in a structured manner, GCP’s AI capabilities can help businesses improve productivity, reliability, and innovation. Success depends on deliberate deployment, continuous learning, and a commitment to data-driven decisions. Firms that embrace these changes will be better placed to meet the growing demands of modern software delivery as AI advances. The principles and practices described above offer a framework for starting that journey, helping firms transform their software delivery capabilities while managing risk and supporting long-term success.