AWS EMR: Why Automated Hue Portal Setup & Hadoop Integration Make Data Analytics Easy

Introduction

In this data-driven era, processing ever-larger volumes of information quickly has become essential. Many of us are familiar with AWS EMR (Amazon Elastic MapReduce) for data processing and analytics. One of EMR's highlights is that it takes much of the pain out of setting up analytics tools like Hue, which can be tedious to install and configure yourself. In this blog, we will discuss how AWS EMR makes this process easier and solves common data team challenges.

What is AWS EMR?

AWS EMR is a managed cloud service that makes it easy to run big data frameworks such as Apache Hadoop, Apache Spark, and Presto. It can process huge datasets quickly and cost-efficiently by leveraging the scalability and flexibility of the AWS cloud.

Creating an AWS EMR cluster from the AWS Management Console is simple. Here's a step-by-step walkthrough to get you started:

Step 1: Log into your AWS Management Console

- Navigate to the AWS Management Console.

- Log in with your AWS account credentials.

Step 2: Navigate to EMR

- In the AWS Management Console, type EMR in the search bar.

- Select Amazon EMR to access the EMR console.

Step 3: Create a Cluster

- Click on the Create cluster button.

Step 4: Configure the Cluster

Basic Options

- Cluster Name: Input a name for your cluster.

- Release: Select the EMR release version. Most of the time it’s best to choose the most recent stable release.

Applications

Pick the applications you want to run on the cluster. Common selections include:

- Hadoop

- Spark

- Hive

- Hue (if you want a web interface)
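You may later prefer to script cluster creation instead of clicking through the console. In that case, these choices map to the `Applications` parameter of the EMR `run_job_flow` call in boto3, the AWS SDK for Python. The minimal sketch below assumes boto3 as the client library. The helper `build_applications` is a hypothetical name, and it only builds the parameter without calling AWS.

```python
# Minimal sketch: build the Applications list for boto3's EMR run_job_flow call.
# The helper name build_applications is hypothetical, not part of boto3.
DEFAULT_APPS = ["Hadoop", "Spark", "Hive", "Hue"]


def build_applications(names=None):
    """Return the Applications parameter, one {"Name": ...} dict per application."""
    names = DEFAULT_APPS if names is None else names
    # dict.fromkeys drops duplicate names while keeping the original order.
    return [{"Name": name} for name in dict.fromkeys(names)]


print(build_applications())
```

Dropping `"Hue"` from the list is all it takes to skip the web interface. Adding it is likewise all it takes to get Hue installed.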

Hardware Configuration

Instance Type: Pick the instance types for the master and core nodes. Common choices are:

- Master: m5.xlarge (or similar).

- Core: m5.xlarge (or similar).

- Instance Count: Specify the number of core and task instances. For a simple setup, you can start with one master node and two core (worker) nodes.
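The same hardware layout can be expressed as EMR instance groups. This minimal sketch assumes boto3's `run_job_flow`, where the list goes under `Instances["InstanceGroups"]`. The helper name `build_instance_groups` and the group names are illustrative choices, not required values.

```python
# Minimal sketch: one master node plus N core nodes as EMR instance groups,
# in the shape boto3's run_job_flow expects under Instances["InstanceGroups"].
# The helper name and the group names are hypothetical.


def build_instance_groups(instance_type="m5.xlarge", core_count=2):
    if core_count < 0:
        raise ValueError("core_count cannot be negative")
    return [
        {
            "Name": "Primary",
            "Market": "ON_DEMAND",
            "InstanceRole": "MASTER",
            "InstanceType": instance_type,
            "InstanceCount": 1,  # a single master node in this simple setup
        },
        {
            "Name": "Core",
            "Market": "ON_DEMAND",
            "InstanceRole": "CORE",  # core nodes run tasks and store HDFS data
            "InstanceType": instance_type,
            "InstanceCount": core_count,
        },
    ]


groups = build_instance_groups()
print(sum(group["InstanceCount"] for group in groups))
```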

Network

- VPC: Select a Virtual Private Cloud (VPC) for the cluster. It's best to set this up beforehand.

- Subnet: Pick a subnet in your VPC where your cluster will run.
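When you create a cluster through the API rather than the console, you choose the VPC implicitly by choosing one of its subnets. A minimal sketch, assuming boto3's `run_job_flow`, where `Ec2SubnetId` goes inside the `Instances` parameter. The helper name and the subnet ID are hypothetical placeholders.

```python
# Minimal sketch: network settings for boto3's EMR run_job_flow call.
# The helper name and the subnet ID used below are hypothetical placeholders.


def build_network_settings(subnet_id):
    """Return the Instances fragment that places the cluster in one subnet."""
    # Subnet IDs start with "subnet-"; fail early on an obvious copy-paste mistake.
    if not subnet_id.startswith("subnet-"):
        raise ValueError(f"not a subnet ID: {subnet_id!r}")
    # The cluster launches into the VPC that owns this subnet.
    return {"Ec2SubnetId": subnet_id}


print(build_network_settings("subnet-0123456789abcdef0"))
```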

Step 5: Configure Security

- EC2 Key Pair: Use an existing EC2 key pair or create a new one. This key will be important when accessing your cluster over SSH.

- IAM Roles: Select or create the IAM roles your cluster needs to communicate with other AWS services. An IAM role is an AWS identity whose permission policies determine which resources it can access. EMR uses a service role for the EMR service itself and an EC2 instance profile for the cluster's instances.
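In API terms, these security choices become three request fields. This is a minimal sketch assuming boto3's `run_job_flow`. The default role names are the ones that `aws emr create-default-roles` creates. `my-emr-key` and the helper name `build_security_settings` are hypothetical.

```python
# Minimal sketch: security-related fields for boto3's EMR run_job_flow call.
# Role names default to the ones `aws emr create-default-roles` creates;
# the key pair name and helper name are hypothetical.


def build_security_settings(key_name,
                            service_role="EMR_DefaultRole",
                            instance_profile="EMR_EC2_DefaultRole"):
    return {
        # Role the EMR service assumes to provision resources on your behalf.
        "ServiceRole": service_role,
        # Instance profile the cluster's EC2 instances use to call other AWS services.
        "JobFlowRole": instance_profile,
        # Ec2KeyName lives inside Instances; it enables SSH with the matching .pem file.
        "Instances": {"Ec2KeyName": key_name},
    }


print(build_security_settings("my-emr-key"))
```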

Step 6: Bootstrap Actions (Optional)

If you want to install any custom software or libraries when the cluster starts, you can specify bootstrap actions here.
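A bootstrap action is a script, typically stored in Amazon S3, that EMR runs on each node before it installs the applications you selected. The minimal sketch below assumes boto3's `run_job_flow` `BootstrapActions` parameter. The bucket, script path, and helper name are hypothetical placeholders.

```python
# Minimal sketch: one entry for the BootstrapActions parameter of boto3's
# run_job_flow. The S3 path and helper name are hypothetical placeholders.


def build_bootstrap_action(name, script_path, args=()):
    return {
        "Name": name,
        # Script location plus any command-line arguments passed to it.
        "ScriptBootstrapAction": {"Path": script_path, "Args": list(args)},
    }


actions = [
    build_bootstrap_action(
        "Install Python libraries",
        "s3://my-bucket/bootstrap/install-libs.sh",  # hypothetical script
        ["pandas"],
    )
]
print(actions)
```

Keep bootstrap scripts short and idempotent. A failing bootstrap action stops the cluster from starting.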

Step 7: Review and Create

- Review all your settings. Make sure everything is set up properly.

- Click Create cluster to start provisioning, then continue with the next step.
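The console steps above can also be collapsed into a single API request, which is handy for repeatable setups. This is a minimal sketch, not an official recipe. It assumes Python with boto3, the default EMR roles already existing, and placeholder values for the cluster name, release label, key pair, and subnet. The helper names are hypothetical.

```python
# Minimal sketch of the console walkthrough as one boto3 run_job_flow request.
# Assumptions: default EMR roles exist; all names and IDs are placeholders.


def build_cluster_request(name, release_label, key_name, subnet_id, core_count=2):
    return {
        "Name": name,
        "ReleaseLabel": release_label,  # e.g. "emr-6.15.0"; prefer a current release
        "Applications": [{"Name": app} for app in ("Hadoop", "Spark", "Hive", "Hue")],
        "Instances": {
            "InstanceGroups": [
                {"InstanceRole": "MASTER", "InstanceType": "m5.xlarge", "InstanceCount": 1},
                {"InstanceRole": "CORE", "InstanceType": "m5.xlarge", "InstanceCount": core_count},
            ],
            "Ec2KeyName": key_name,
            "Ec2SubnetId": subnet_id,
            # Keep the cluster alive for interactive use (Hue, SSH) when no steps are queued.
            "KeepJobFlowAliveWhenNoSteps": True,
        },
        "ServiceRole": "EMR_DefaultRole",
        "JobFlowRole": "EMR_EC2_DefaultRole",
    }


def create_cluster(request, region_name="us-east-1"):
    """Submit the request and return the new cluster ID (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so building the request needs no dependencies

    emr = boto3.client("emr", region_name=region_name)
    return emr.run_job_flow(**request)["JobFlowId"]
```

Usage: `create_cluster(build_cluster_request("analytics-demo", "emr-6.15.0", "my-emr-key", "subnet-0123456789abcdef0"))` returns a cluster ID such as `j-...`. Building the request separately from sending it makes the configuration easy to review before you pay for any instances.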

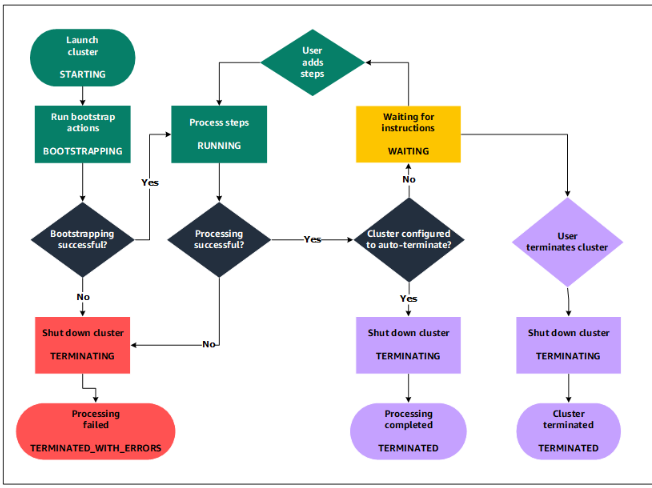

Step 8: Monitor Cluster Creation

- After you create the cluster, provisioning takes a few minutes.

- You can view the status of your cluster in the EMR console. Wait for the cluster to move from “Starting” to “Running” (or “Waiting”, which means the cluster is up but has no steps to run).
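If you create clusters from code, you can wait for the same state change programmatically. This is a minimal sketch assuming boto3. The state names are those the EMR API reports. The helper names `cluster_status` and `wait_until_ready` are hypothetical. `cluster_running` is boto3's built-in EMR waiter.

```python
# Minimal sketch: interpret EMR cluster states while waiting for provisioning.
# State names follow the EMR API; the helper names are hypothetical.
READY_STATES = {"RUNNING", "WAITING"}
ENDED_STATES = {"TERMINATING", "TERMINATED", "TERMINATED_WITH_ERRORS"}


def cluster_status(describe_response):
    """Classify a describe_cluster response as 'ready', 'ended', or 'pending'."""
    state = describe_response["Cluster"]["Status"]["State"]
    if state in READY_STATES:
        return "ready"
    if state in ENDED_STATES:
        return "ended"  # shutting down or already gone
    return "pending"  # STARTING or BOOTSTRAPPING


def wait_until_ready(cluster_id, region_name="us-east-1"):
    """Block until the cluster is RUNNING or WAITING (needs boto3 and AWS credentials)."""
    import boto3

    emr = boto3.client("emr", region_name=region_name)
    # The waiter polls describe_cluster and raises if the cluster terminates instead.
    emr.get_waiter("cluster_running").wait(ClusterId=cluster_id)
    return cluster_status(emr.describe_cluster(ClusterId=cluster_id))
```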

Step 9: Access Your Cluster

After the cluster has started, you can access it via SSH using the EC2 key pair that you selected. The master node's security group must allow inbound SSH (port 22) from your IP address.

Connecting to the master node:

ssh -i your-key.pem hadoop@master-node-public-dns

Step 10: Use Hue (Optional)

- If you installed Hue, its web interface runs on port 8888 of the master node. You can reach it at the master node's public DNS address:

- http://master-node-public-dns:8888 (port 8888 is usually not open to the internet, so you may need an SSH tunnel or a security group rule that allows your IP address)

- Start running queries in Hue and browse and manage your data.
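A common way to reach Hue without exposing port 8888 publicly is SSH local port forwarding through the master node. The minimal sketch below only builds the `ssh` command line. The key file, DNS name, local port 8157, and the helper name `hue_tunnel_command` are placeholders or assumptions.

```python
# Minimal sketch: build an SSH local port-forwarding command so Hue (port 8888
# on the master node) is reachable at http://localhost:8157.
# Key file, DNS name, and helper name are hypothetical placeholders.
import shlex


def hue_tunnel_command(key_file, master_dns, local_port=8157, hue_port=8888):
    return [
        "ssh", "-i", key_file,
        "-N",  # run no remote command; only forward the port
        "-L", f"{local_port}:{master_dns}:{hue_port}",
        f"hadoop@{master_dns}",
    ]


cmd = hue_tunnel_command("your-key.pem", "master-node-public-dns")
print(shlex.join(cmd))
# ssh -i your-key.pem -N -L 8157:master-node-public-dns:8888 hadoop@master-node-public-dns
```

Run the printed command in a terminal (or pass `cmd` to `subprocess.run`), leave it open, and browse to http://localhost:8157. Traffic to Hue then travels over the already-permitted SSH port.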

Step 11: Terminate the Cluster

- Once you're done, terminate your cluster so you stop paying for it:

- Go back to the EMR console.

- Choose your cluster and press Terminate.
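The same cleanup can be scripted so clusters are never left running by accident. A minimal sketch, assuming boto3's `terminate_job_flows`. If termination protection is enabled on the cluster, you have to turn it off first. The helper names are hypothetical, and the cluster ID must be your own.

```python
# Minimal sketch: terminate clusters from code instead of the console.
# Helper names are hypothetical; EMR cluster IDs look like "j-XXXXXXXX".


def terminate_request(cluster_ids):
    ids = list(cluster_ids)
    if not ids or not all(cid.startswith("j-") for cid in ids):
        raise ValueError("expected one or more EMR cluster IDs like 'j-XXXXXXXX'")
    return {"JobFlowIds": ids}


def terminate_clusters(cluster_ids, region_name="us-east-1"):
    """Request termination (needs boto3 and AWS credentials)."""
    import boto3

    emr = boto3.client("emr", region_name=region_name)
    emr.terminate_job_flows(**terminate_request(cluster_ids))
```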

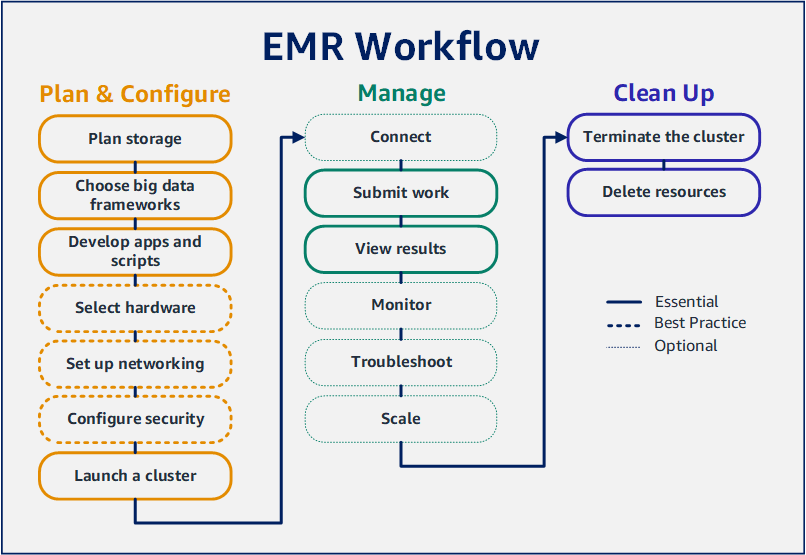

EMR Workflow

Understanding Hadoop

Hadoop is an open-source framework from the Apache Software Foundation for processing large data sets across a distributed cluster of computers using a simple programming model. It consists of:

- Hadoop Distributed File System (HDFS): A distributed file system that stores data across multiple machines and provides high-throughput access to application data.

- YARN (Yet Another Resource Negotiator): Hadoop's resource management layer, responsible for scheduling tasks and managing cluster resources.

- MapReduce: A programming model for processing and generating large data sets with a parallel, distributed algorithm.

Hadoop is built to scale from a single server to thousands of machines, which makes it a powerful, fault-tolerant tool for large-scale data processing.
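To make the MapReduce model concrete, here is the classic word count simulated in a single Python process. It is an illustration of the programming model only, not Hadoop's API. On a real cluster, the map and reduce phases run in parallel across nodes, and YARN schedules them.

```python
# Minimal sketch of the MapReduce programming model, simulated in one process:
# map emits (key, value) pairs, a shuffle groups values by key, and reduce
# combines each group. Hadoop runs these phases in parallel across the cluster.
from collections import defaultdict


def map_phase(line):
    # Emit (word, 1) for every word in a line of input.
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    # Group all values that share a key, as Hadoop does between map and reduce.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    # Combine all counts for one word.
    return key, sum(values)


def word_count(lines):
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


print(word_count(["big data on EMR", "big clusters process big data"]))
# {'big': 3, 'data': 2, 'on': 1, 'emr': 1, 'clusters': 1, 'process': 1}
```

Because each map call and each reduce call touches only its own input, the framework is free to spread them over many machines. That independence is what lets Hadoop scale out.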

The Challenge of Manual Setup

Configuring a data processing environment usually requires several steps:

- Cluster Configuration: The process of specifying instance types, sizes, and configurations can be tedious.

- Tool Installation: Tools such as Hue, which offers a web-based interface for data processing, must be installed and configured by hand.

- Integration: It can be difficult to ensure that various components (e.g., Hadoop, Hive, Spark) work together smoothly.

Comparison of Manual Hadoop Setup vs. AWS EMR

| Feature | Manual Setup | AWS EMR |
| --- | --- | --- |
| Time | Significant time investment for installation, configuration, and maintenance. | Relatively quick and easy setup process. |
| Complexity | Requires deep technical expertise in Hadoop components, networking, and security. | Manages many of the complexities, providing a simplified user experience. |
| Cost | High upfront costs for hardware, software, and ongoing maintenance. | Pay-as-you-go pricing model with potential cost savings, especially for smaller or intermittent workloads. |
| Scalability | Can be challenging to scale clusters manually, especially for dynamic workloads. | Offers easy scalability with the ability to add or remove nodes as needed. |
| Maintenance | Requires constant monitoring, patching, and updates for security and performance. | Handles most maintenance tasks, reducing the burden on users. |
| Expertise | Requires specialized knowledge in Hadoop administration. | Can be used by users with less Hadoop expertise. |

How AWS EMR Makes the Process Easier

Automated Hue Portal Setup

Built-in Hue support is one of AWS EMR's best features. You can enable Hue simply by selecting it as an application when you create a new EMR cluster. Because EMR installs and configures it automatically, there is nothing to set up by hand, so data scientists and analysts can focus on what matters most: getting insights out of data.

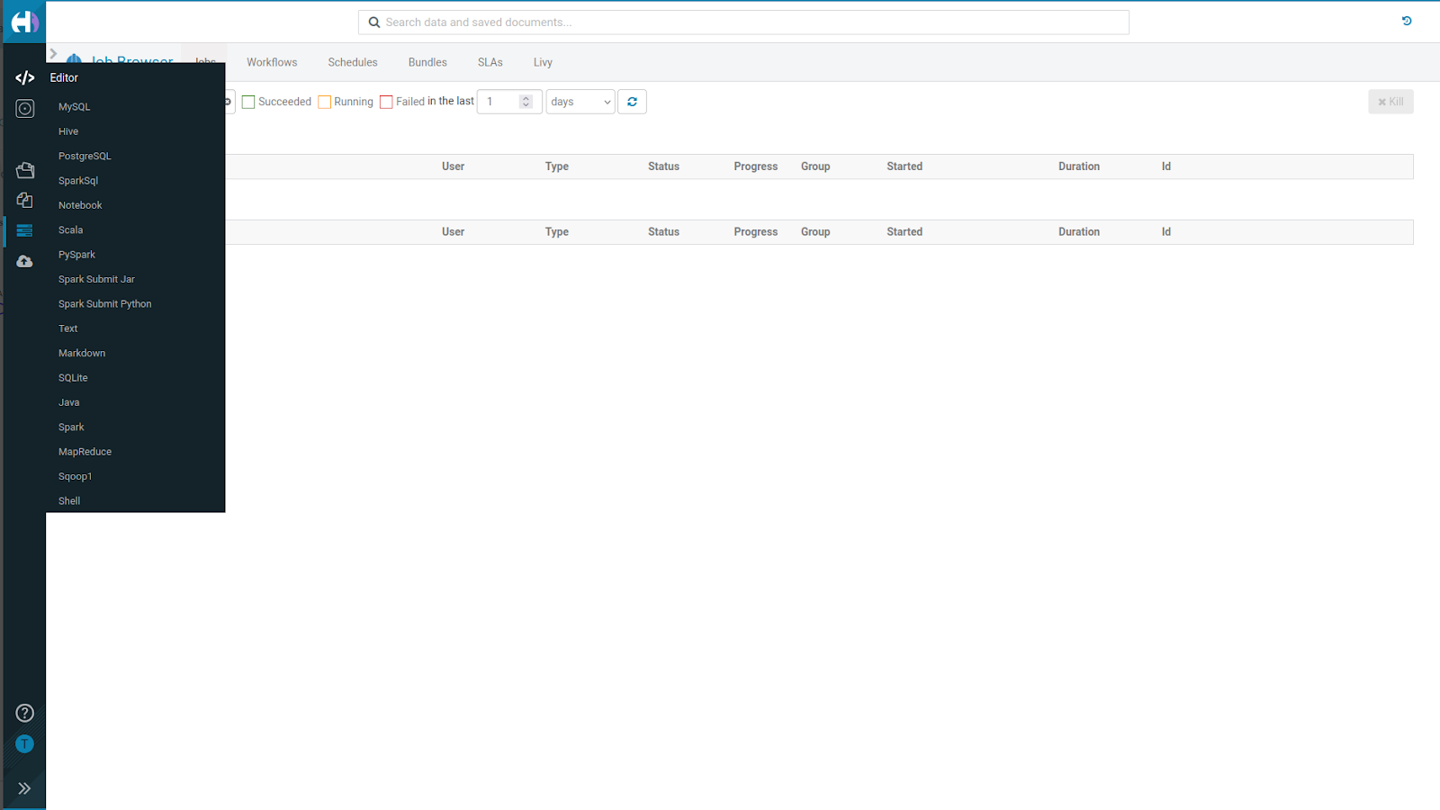

Hue Interface

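Handled manually, the setup challenges described earlier can result in delays, misconfigurations, and cost overruns. Letting EMR install and configure Hue avoids much of that risk.

Flexible Scaling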

With AWS EMR, you can easily scale your cluster up or down, adding or removing resources to match the workload with minimal downtime. This flexibility means you can process bulk data during busy periods and scale back when demand subsides, optimizing costs.
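As a minimal sketch of manual resizing, assuming boto3's `modify_instance_groups`: the request below changes the size of one instance group on a running cluster. The cluster ID, the instance group ID (which you can look up with `list_instance_groups`), and the helper names are placeholders. Shrinking task groups is usually safer than shrinking core groups, because core nodes also hold HDFS data.

```python
# Minimal sketch: resize one instance group of a running cluster with boto3's
# modify_instance_groups. IDs and helper names are hypothetical placeholders.


def resize_request(cluster_id, instance_group_id, new_count):
    if new_count < 0:
        raise ValueError("instance count cannot be negative")
    return {
        "ClusterId": cluster_id,
        "InstanceGroups": [
            {"InstanceGroupId": instance_group_id, "InstanceCount": new_count}
        ],
    }


def resize(cluster_id, instance_group_id, new_count, region_name="us-east-1"):
    """Send the resize request (needs boto3 and AWS credentials)."""
    import boto3

    emr = boto3.client("emr", region_name=region_name)
    emr.modify_instance_groups(**resize_request(cluster_id, instance_group_id, new_count))
```

EMR also offers managed scaling, which adjusts capacity automatically within limits you set. The explicit call above is simply the most transparent way to see what a resize does.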

Cost-Effective Solutions

AWS EMR charges you only for your usage. You can spin up clusters for short-term projects without having to invest in long-term infrastructure. The on-demand pricing model allows organizations to experiment with data analytics without incurring heavy costs.
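A rough way to reason about pay-as-you-go cost: for each instance, EMR bills an EMR fee on top of the EC2 price, per second with a one-minute minimum. The hourly rates in this minimal sketch are made-up placeholders, not real prices. Look up current rates for your Region and instance type. The helper name `cluster_cost` is hypothetical, and the sketch ignores storage and data-transfer charges.

```python
# Minimal sketch of pay-as-you-go arithmetic for a cluster of identical nodes.
# EMR bills an EMR fee on top of the EC2 price per instance, per second with a
# one-minute minimum. The rates below are hypothetical placeholders.


def cluster_cost(node_count, seconds, ec2_hourly, emr_hourly):
    billable_seconds = max(seconds, 60)  # one-minute minimum
    hours = billable_seconds / 3600
    return node_count * hours * (ec2_hourly + emr_hourly)


# 3 nodes for 2 hours at hypothetical rates of $0.20 (EC2) + $0.05 (EMR) per hour.
print(round(cluster_cost(3, 2 * 3600, 0.20, 0.05), 2))  # 1.5
```

Because the cost scales with node-hours, a cluster that is terminated as soon as its work finishes costs only for the time it actually ran.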

Easy Monitoring and Management

AWS offers monitoring tools such as Amazon CloudWatch, which can monitor the performance and health of your EMR clusters. This allows you to easily pinpoint and fix errors, keeping the pipeline for processing your data running smoothly.
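For example, EMR publishes an `IsIdle` metric to CloudWatch (namespace `AWS/ElasticMapReduce`, dimension `JobFlowId`). It reports 1 when a cluster is running but has no work, which is a useful signal for catching forgotten clusters. This minimal sketch assumes boto3's CloudWatch `get_metric_statistics`. The helper names and the cluster ID are hypothetical.

```python
# Minimal sketch: query EMR's IsIdle metric (1 = cluster alive but doing no work).
# Helper names and the cluster ID are hypothetical placeholders.
from datetime import datetime, timedelta, timezone


def idle_metric_request(cluster_id, minutes=30, now=None):
    end = now if now is not None else datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/ElasticMapReduce",
        "MetricName": "IsIdle",
        "Dimensions": [{"Name": "JobFlowId", "Value": cluster_id}],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 300,  # EMR cluster metrics arrive at five-minute intervals
        "Statistics": ["Average"],
    }


def is_idle(cluster_id, region_name="us-east-1"):
    """Return True if every recent data point reports idle (needs boto3 and credentials)."""
    import boto3

    cw = boto3.client("cloudwatch", region_name=region_name)
    points = cw.get_metric_statistics(**idle_metric_request(cluster_id))["Datapoints"]
    return bool(points) and all(p["Average"] == 1.0 for p in points)
```

In practice you might attach a CloudWatch alarm to the same metric rather than polling it from code.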

AWS EMR Data Processing Workflow Flowchart

Solving Common Problems with EMR

- Complexity: Manual configuration can introduce problems that are hard to troubleshoot. EMR's automated setup reduces this complexity.

- Time Consumption: Setting up data processing manually may be time-consuming. EMR’s simple configuration accelerates time to value.

- Cost Optimization: EMR scales on-demand, avoiding resource wastage and optimizing costs.

- Learning Curve: Easy-to-use tools like Hue let less technical team members access and understand data, which leads to greater collaboration across teams.

Conclusion

AWS EMR simplifies how organizations manage big data by reducing the effort needed to set up and maintain analytics tools. With automated installation, seamless integration, and cost-effective pricing, teams can concentrate on analyzing data rather than managing infrastructure. AWS EMR helps organizations get more value from their data, driving insights and innovation with minimal friction.