Prompt Engineering for DevOps: Enhancing Automation and Efficiency

Introduction

Artificial intelligence has significantly transformed many sectors in recent years, and DevOps is no exception. With the growing use of AI tools like ChatGPT, prompt engineering has become a vital skill for DevOps professionals.


This blog explores the significance of prompt engineering and its relevance to DevOps, with insights into how AI can enhance infrastructure management, continuous integration and delivery (CI/CD) pipelines, incident response, and beyond.

What is Prompt Engineering?

Prompt engineering is the practice of designing, developing, and refining prompts so that foundation models produce output suited to your needs. A vague prompt gives the model little guidance and leaves a lot to its interpretation, so the structure and wording of a prompt strongly influence the quality of AI-generated responses. In DevOps, carefully crafted prompts can help automate numerous tasks, support troubleshooting, generate automation scripts, and produce documentation on demand.

Why Should DevOps Engineers Care About Prompt Engineering?

In the realm of DevOps, time is invaluable. Prompt engineering can deliver:

- On-Demand Code Generation: Automate the creation of documentation and code. Instantly generate Terraform configurations, Ansible playbooks, Python scripts, or Jenkinsfiles.

- Faster Troubleshooting: Quickly compose alerts or responses to incidents.

- Automation of Repetitive Tasks: Minimize repetitive work by offloading it to AI.

- Enhanced Decision-Making: AI can recommend scaling infrastructure, improving deployment strategies, or optimizing resource usage based on detailed prompts.

Prompt engineering is a powerful technique for enhancing the efficiency of your DevOps processes.

Key Prompt Engineering Techniques for DevOps

This section examines key prompt engineering techniques and how each can be incorporated into DevOps workflows to yield significant advantages.

| Technique | What It Does | Example Prompt |
|---|---|---|
| Zero-Shot Prompting | The model answers without any prior examples. | “Write Terraform code to create an EC2 instance.” |
| Few-Shot Prompting | Provides a few examples to help the model understand the task. | “Here are two alerts: <examples>. Now write an alert for CPU usage over 90%.” |
| Chain of Thought Prompting | Guides the model through a multi-step task one step at a time. | “First, explain Jenkins pipelines. Next, generate a Docker-based pipeline. Then show how to deploy it to AWS.” |
| System Prompts | Sets the model’s overall behavior (e.g., instructs it to act as a DevOps assistant). | “Act as a DevOps Engineer. Generate Ansible playbooks for Apache server setup.” |
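In chat-style APIs, a system prompt is usually sent as a separate message placed before the user's request, while a zero-shot prompt is simply the task on its own. The sketch below assumes the widely used list-of-dicts message format with `role` and `content` keys (as accepted by OpenAI-compatible chat APIs); the helper `build_messages` and the prompt wording are hypothetical, and no model is actually called.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_messages(task: str, system: Optional[str] = None) -> List[Message]:
    """Assemble a chat-style prompt: an optional system message, then the user task.

    The role/content layout is an assumed convention shared by many
    chat-completion APIs; adapt it to the client you actually use.
    """
    messages: List[Message] = []
    if system:
        # The system message sets persistent behavior for the whole conversation.
        messages.append({"role": "system", "content": system})
    # The user message carries the concrete request.
    messages.append({"role": "user", "content": task})
    return messages


# Zero-shot: only the task, with no examples and no system message.
zero_shot = build_messages("Write Terraform code to create an EC2 instance.")

# System prompt: the persona moves out of the task and into a system message.
with_system = build_messages(
    "Generate an Ansible playbook for Apache server setup.",
    system="You are a DevOps engineer. Reply with valid YAML only.",
)

for msg in with_system:
    print(f"{msg['role']}: {msg['content']}")
```

Separating the persona from the task lets you reuse one system message across many different requests.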

Prompt Engineering Techniques in Action (with Examples)

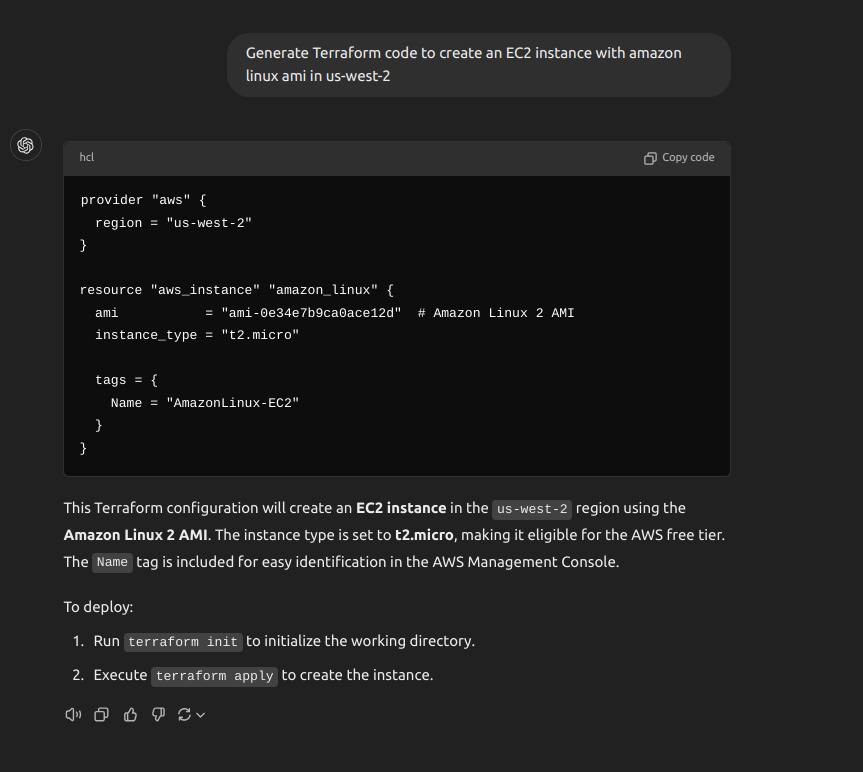

- Zero-Shot Prompting (Quick Terraform Configuration): No need to search Google! Just ask the AI to generate a Terraform configuration.

Zero-shot Prompt Engineering

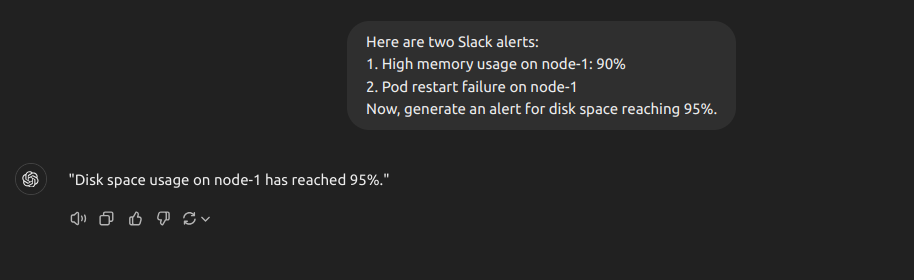

Zero-shot Prompt Engineering

- Few-Shot Prompting: Incident Alert

Few-shot Prompt Engineering

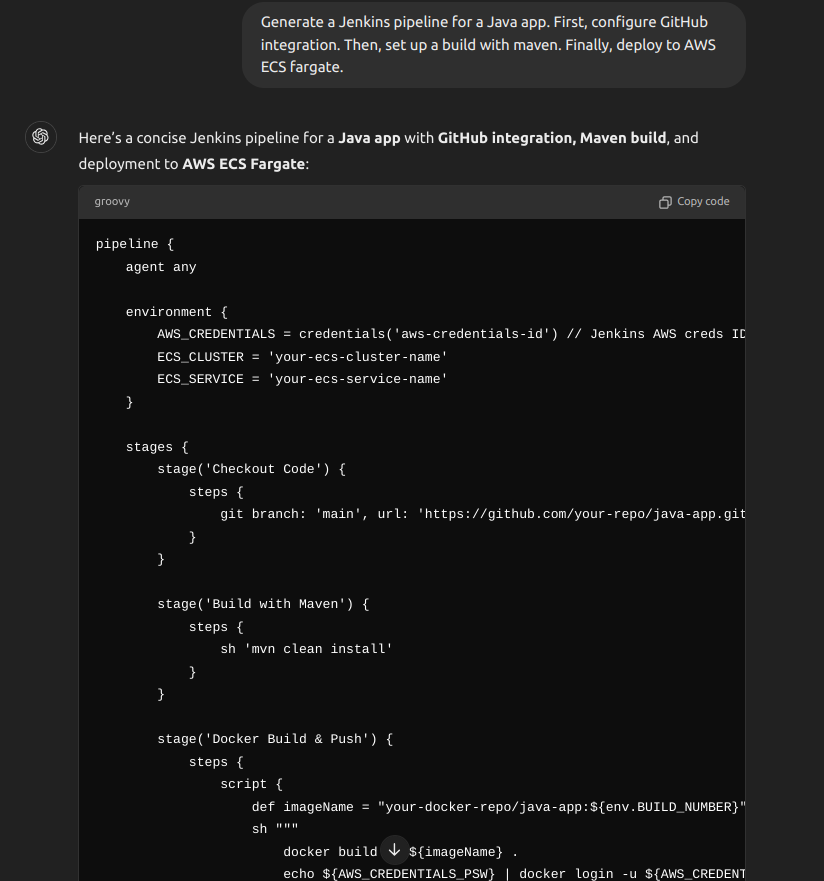
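A few-shot prompt like the incident-alert example can be assembled programmatically so that every request shows the model the same examples. In this minimal sketch, the two sample alerts, their field layout, and the helper `few_shot_alert_prompt` are hypothetical placeholders; substitute alerts from your own monitoring setup. The code only builds the prompt text and does not call any model.

```python
from typing import List

# Hypothetical example alerts that show the model the desired format.
EXAMPLE_ALERTS: List[str] = [
    "[WARNING] web-01: Memory usage at 85% for 10 minutes. Action: check for leaks.",
    "[CRITICAL] db-01: Disk usage at 95% on /var. Action: free space or expand volume.",
]


def few_shot_alert_prompt(examples: List[str], request: str) -> str:
    """Build a few-shot prompt: numbered examples first, then the new task."""
    lines = [f"Here are {len(examples)} example alerts:"]
    for i, example in enumerate(examples, start=1):
        lines.append(f"Example {i}: {example}")
    # The request comes last so the model continues the established pattern.
    lines.append(f"Now write an alert in the same format for: {request}")
    return "\n".join(lines)


prompt = few_shot_alert_prompt(EXAMPLE_ALERTS, "CPU usage over 90% on app-02")
print(prompt)
```

Keeping the examples in a single list tends to make the generated alerts more consistent, because the model always sees the same format.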

- Chain of Thought Prompting: CI/CD Setup Example.

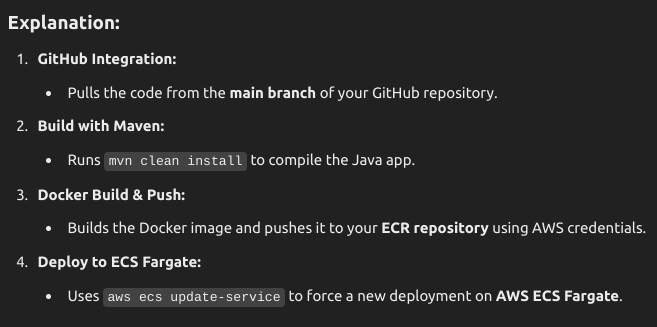


Chain of Thought Prompting
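The CI/CD example works by spelling out the steps the model should follow in order. A minimal sketch of turning an ordered step list into one such prompt is shown below; the goal text, the step wording, and the helper `step_by_step_prompt` are hypothetical, and the code only builds the prompt rather than calling a model.

```python
from typing import List

# Hypothetical steps mirroring the CI/CD example in this section.
CI_CD_STEPS: List[str] = [
    "Explain what a Jenkins pipeline is.",
    "Generate a Jenkinsfile that builds and tests a Docker image.",
    "Show how to deploy the resulting image to AWS.",
]


def step_by_step_prompt(goal: str, steps: List[str]) -> str:
    """Combine a goal and an ordered list of steps into a single prompt
    that asks the model to work through the steps in sequence."""
    lines = [goal, "Work through the following steps in order:"]
    for number, step in enumerate(steps, start=1):
        lines.append(f"Step {number}: {step}")
    # Labeled sections make a multi-part answer easier to review.
    lines.append("Label each part of your answer with its step number.")
    return "\n".join(lines)


cicd_prompt = step_by_step_prompt(
    "Help me set up CI/CD for a containerized web application.", CI_CD_STEPS
)
print(cicd_prompt)
```

Keeping the steps in a list also makes it easy to reorder them, or to send each one as a separate follow-up prompt if a single response grows too long.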

Best Practices for Prompt Engineering in DevOps

- Be Precise: Vague prompts may lead to incomplete or incorrect responses. For instance, specify the type of infrastructure, cloud service, or programming language required.

- Provide Context: Give your prompts context, such as the environment (e.g., AWS, Azure, GCP), version details, or particular configurations, so the AI can tailor its response effectively.

- Iterate and Improve: You may not get the ideal answer on the first attempt. Experiment with different prompt formats and refine them based on the responses you receive to improve the quality of the output.

- Utilize Code Examples: If the AI has access to the context from an existing codebase or configuration file, it can generate more precise responses. Include relevant code snippets in the prompt to direct the AI’s focus.

- Treat AI as a Collaborator, Not a Substitute: While AI-generated recommendations can be beneficial, it is essential to review and verify the output before implementing it in a production environment. Prompt engineering should serve to enhance your expertise rather than replace it.
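One way to apply these practices consistently is to build prompts from a template with explicit slots for the task, environment, versions, constraints, and an existing code snippet. The sketch below is a hypothetical template (the class `DevOpsPrompt`, its field names, the `<config>` delimiters, and the sample values are all illustrative assumptions, not a standard format); it only renders text and does not call any model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DevOpsPrompt:
    """Hypothetical template that applies the best practices to every prompt."""

    task: str  # Be precise: one concrete request
    environment: str  # Provide context: cloud, region, tooling
    versions: List[str] = field(default_factory=list)  # tool versions in use
    constraints: List[str] = field(default_factory=list)  # naming, security rules
    snippet: Optional[str] = None  # Utilize code examples: existing config

    def render(self) -> str:
        parts = [f"Task: {self.task}", f"Environment: {self.environment}"]
        if self.versions:
            parts.append("Versions: " + ", ".join(self.versions))
        if self.constraints:
            parts.append("Constraints:")
            parts.extend(f"- {c}" for c in self.constraints)
        if self.snippet:
            # Delimit existing code so the model can tell it apart from instructions.
            parts.append("Existing configuration:\n<config>\n" + self.snippet.strip() + "\n</config>")
        return "\n".join(parts)


spec = DevOpsPrompt(
    task="Add an S3 bucket with versioning enabled to this Terraform module.",
    environment="AWS, us-east-1, Terraform with the AWS provider",
    versions=["Terraform 1.x"],
    constraints=["Block all public access", "Tag every resource with owner=platform"],
    snippet='provider "aws" {\n  region = "us-east-1"\n}',
)
print(spec.render())
```

Whatever the model returns from a prompt like this still needs human review and testing before it reaches production.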


Future of DevOps with Prompt Engineering

The integration of AI-powered prompt engineering with DevOps tools is just beginning. The future may look like this:

- Automated Releases & Deployments: Deployments that are automated through natural language directives.

- Self-Healing Systems: Systems that identify and fix problems on their own.

- DevOps Chatbots: Intelligent chatbots that act as DevOps assistants, handling routine daily tasks.


- Proactive Monitoring: AI-enhanced systems can anticipate and address incidents before they affect users, thereby minimizing downtime.

- Natural Language Queries: Teams can inquire about infrastructure performance or deployment statuses using straightforward text commands.


- AI-Assisted Learning: DevOps teams gain contextual insights and educational resources tailored to their specific environment and challenges.

Conclusion: Make Prompt Engineering Your New DevOps Skill

The field of prompt engineering represents a significant advancement for DevOps engineers. It is not only engaging and practical but also has the potential to reduce hours spent on manual tasks. By mastering the skill of crafting precise prompts, you can enhance your efficiency and explore new opportunities, whether it involves generating Terraform configurations or automating incident notifications.

At TO THE NEW, we assist teams in managing their AI-driven projects effortlessly. Our team of AWS AI-certified DevOps engineers guarantees the smooth integration of AI with DevOps methodologies, leading to more intelligent automation and expedited deployment processes.

So, give it a try! What will your next prompt be?

We invite you to share your thoughts: what is the most impressive DevOps task you have automated using AI? Try generating your next Jenkinsfile or Terraform configuration from a prompt and see the results for yourself!