Building Resilient Cloud Architectures Using GCP Load Balancing

Introduction

In today’s digital world, maintaining application availability and performance is crucial. As user demand grows, effective traffic management prevents downtime and ensures a smooth experience. Load balancers play a key role by distributing traffic across servers and keeping applications responsive. Google Cloud Platform (GCP) offers a range of load-balancing solutions for internal and external traffic, at global or regional scope. This blog explores GCP’s load-balancing options, explains when to use each, and shows how to set them up so your applications run reliably.

Load Balancing in GCP

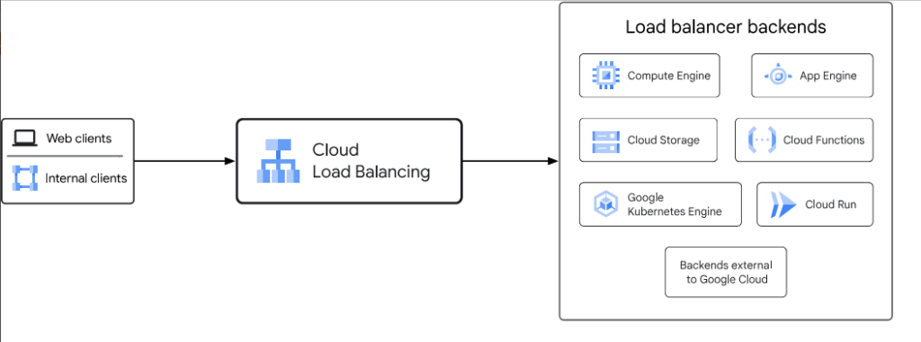

Load balancers distribute network traffic across multiple servers, improving the availability, reliability, and scalability of applications. GCP offers several kinds of load balancers, each designed for specific use cases.


Cloud Load Balancing provides the following features:

- Single anycast IP address: A single anycast IP address serves as the frontend for backends in multiple regions.

- Layer 4 and Layer 7 support: Handles both Layer 4 (TCP, UDP, and other IP protocols) and Layer 7 (HTTP/HTTPS) load balancing, so you can route traffic based on network-level information or on application-level data such as HTTP headers and URLs.

- External and internal load balancing: External load balancers handle internet-facing traffic, and internal load balancers handle traffic inside your VPC network.

- Global and regional support: Distribute traffic across multiple regions or within a single region to improve performance and availability. Global proxy-based load balancers terminate client connections close to users, which reduces latency.

- Cross-region failover: Cross-region load balancing with automatic failover keeps the service running when the primary backends fail, and the load balancer adapts quickly to changes in traffic, user demand, and backend health.
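The failover behavior described above can be illustrated with a small Python model. This is a simplified sketch, not Google's routing algorithm. It assumes the load balancer sends each client to the lowest-latency healthy region and moves to the next-closest healthy region when the primary fails. The region names are only examples, and the latency values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RegionBackend:
    """A region's backends as seen by this simplified load balancer model."""
    region: str
    healthy: bool


def pick_region(backends: list[RegionBackend], latency_ms: dict[str, float]) -> str:
    """Return the healthy region with the lowest latency for this client.

    latency_ms maps region name -> client-to-region latency (hypothetical values).
    Raises RuntimeError if no region is healthy.
    """
    healthy = [b for b in backends if b.healthy]
    if not healthy:
        raise RuntimeError("no healthy backends in any region")
    return min(healthy, key=lambda b: latency_ms[b.region]).region


if __name__ == "__main__":
    latency = {"europe-west1": 12.0, "us-east1": 85.0, "asia-east1": 210.0}
    backends = [RegionBackend(r, healthy=True) for r in latency]
    print(pick_region(backends, latency))  # europe-west1
    backends[0].healthy = False            # primary region fails
    print(pick_region(backends, latency))  # us-east1
```

Real load balancers also weigh backend capacity and utilization. The model keeps only the "closest healthy region" rule to show why a regional outage need not take the service down.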

Types of Load Balancers in GCP

Google Cloud provides two types of load balancers:

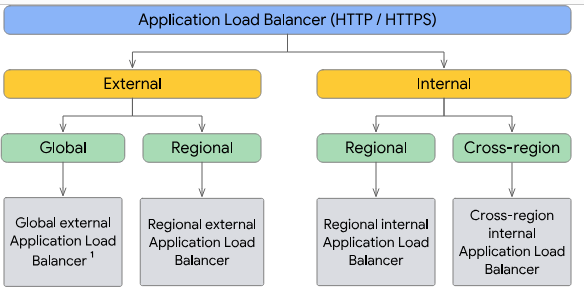

1. Application Load Balancers

2. Network Load Balancers

Application Load Balancer

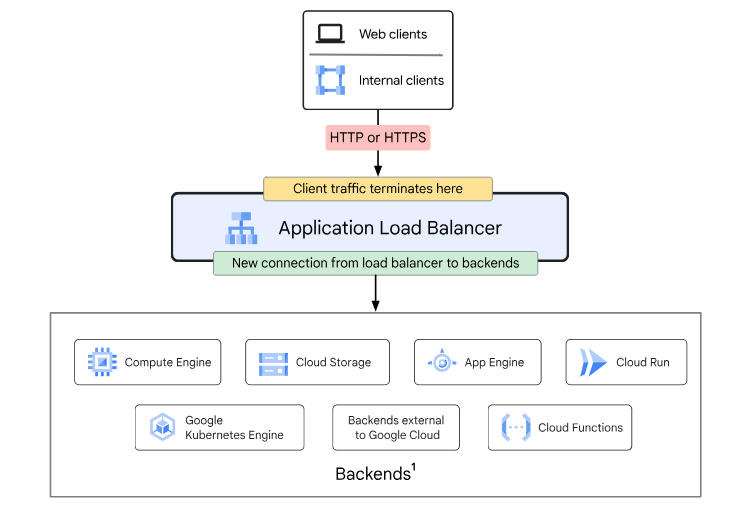

Application Load Balancers are Layer 7 load balancers that act as proxies, letting you manage and scale your services behind a single IP address. They distribute HTTP and HTTPS traffic to backends hosted on Google Cloud services such as Compute Engine and Google Kubernetes Engine (GKE), as well as to external backends outside Google Cloud.

Application Load Balancers can be deployed in two ways:

- External Application Load Balancers:

For applications that must be reachable from the internet, deploy an external Application Load Balancer. It can run in one of three modes: global, regional, or classic.

Global: Distributes traffic to backends across multiple regions.

Regional: Distributes traffic to backends within a single region.

Classic: The classic Application Load Balancer is global in the Premium Tier but can be configured as regional in the Standard Tier.

- Internal Application Load Balancers:

a. This load balancer handles internal traffic at the application layer (Layer 7) and operates as a proxy.

b. It is based on the open-source Envoy proxy, which Google Cloud manages for load balancing and traffic management. Google Cloud also uses Envoy in other products, such as Cloud Endpoints.

c. It uses an internal IP address, so only clients within your VPC network, or in networks connected to it, can reach the load balancer.

d. It can be deployed in two modes: regional and cross-region.

Regional: Supports backends in a single region only.

Cross-region: Supports backends in multiple regions and is globally accessible.

Network Load Balancer

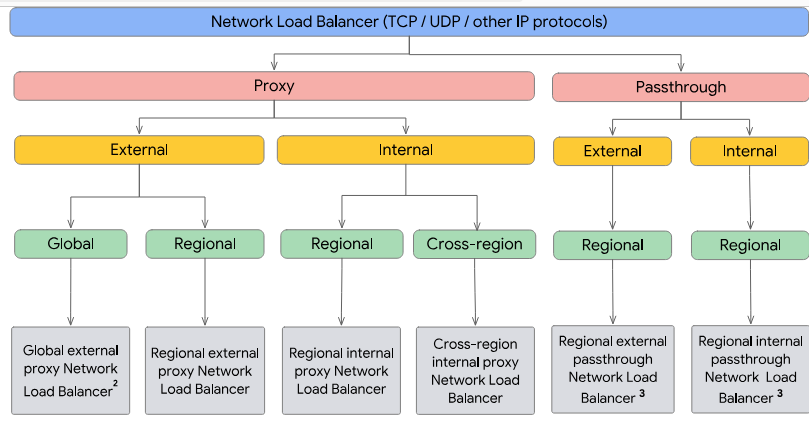

- Network Load Balancers are Layer 4 load balancers that handle TCP, UDP, or other IP protocol traffic.

- These load balancers are available as proxy load balancers or passthrough load balancers.

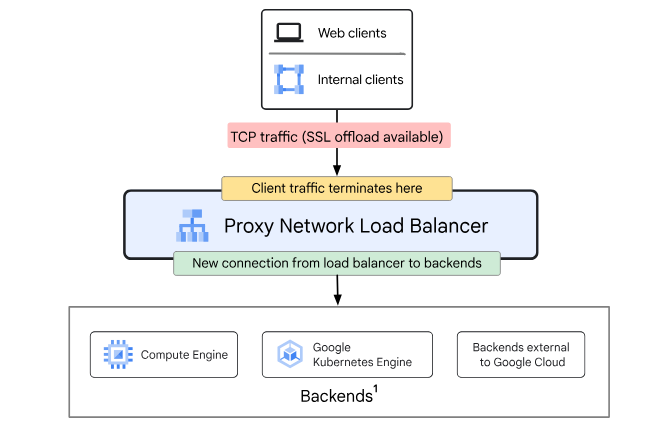

1. Proxy Network Load Balancer:

- Proxy Network Load Balancers are Layer 4 reverse proxies that distribute TCP traffic to backends in your VPC network or in other clouds.

- They are built specifically for TCP traffic, with or without SSL, and can be deployed in the following modes:

External proxy Network Load Balancer:

1. It distributes traffic from the internet to VM instances in a Google Cloud VPC.

2. Acts as a reverse proxy, managing TCP traffic from the internet.

3. Supports using a single IP address for all users worldwide.

4. Can be deployed in global, regional, or classic modes.

Internal proxy Network Load Balancer:

1. The Internal Proxy Network Load Balancer is a Layer 4 load balancer that allows you to manage and scale TCP service traffic behind a regional internal IP address. This IP address is only accessible to clients within the same VPC network or those connected to it.

2. It supports regional and cross-region deployments:

Regional: The load balancer and its backend services are located in a single region.

Cross-region: Load balances traffic across backend services in multiple regions.

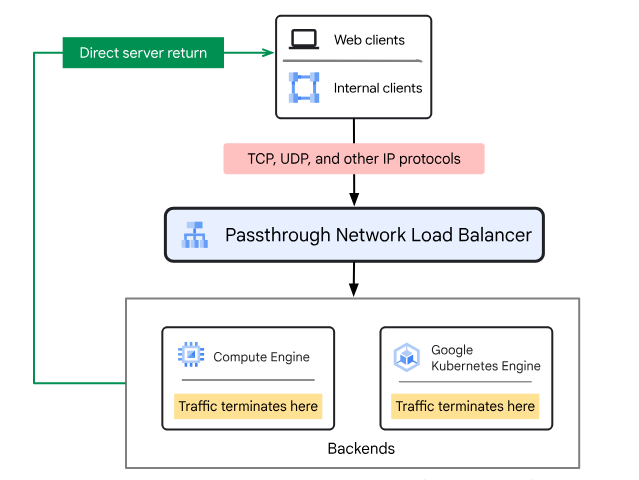

2. Passthrough Network Load Balancers:

- Passthrough Network Load Balancers are Layer 4 regional load balancers that distribute traffic among backends in the same region as the load balancer.

- These load balancers are not proxies. Packets reach backend VMs with original source and destination IPs, protocols, and ports unchanged.

- Backend VMs handle connections directly and send responses straight to clients without passing back through the load balancer. This is known as direct server return (DSR).

- It can be deployed in two modes:

External passthrough Network Load Balancers:

- External passthrough Network Load Balancers handle traffic from internet clients and Google Cloud VMs with external IPs, including those using Cloud NAT.

- It works with backends in the same region and project as the load balancer. The backends can be in different VPC networks.

- Unlike proxies, they don’t terminate connections but forward packets with original IPs, protocols, and ports. Backend VMs manage connections and respond directly to clients.

- It can be configured as either a backend service-based or a target pool-based load balancer:

- Backend service-based load balancers give you finer control over how traffic is distributed to backends. They support IPv4 and IPv6, handle multiple protocols (TCP, UDP, ESP, GRE, ICMP, and ICMPv6), and work with managed and unmanaged instance groups as well as zonal network endpoint groups (NEGs).

- Target pool-based load balancers use a target pool as the backend. The target pool is a group of instances that defines which instances receive traffic, and each forwarding rule acts as the frontend. They support only IPv4 traffic and only the TCP and UDP protocols.

- A target pool-based external passthrough Network Load Balancer has a single target pool, which can be referenced by multiple forwarding rules.
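The sketch below shows how a passthrough load balancer can spread connections across the instances in a target pool by hashing each connection's 5-tuple (source IP, source port, destination IP, destination port, protocol). Every packet of the same connection hashes to the same instance. It is written in Python under stated assumptions. The forwarding rule names, pool name, and instance names are hypothetical. SHA-256 modulo the pool size is only a stand-in: this is not Google's actual hashing, and production schemes reshuffle fewer connections when the pool changes.

```python
import hashlib

# Hypothetical configuration: one target pool referenced by two forwarding rules.
TARGET_POOLS = {"web-pool": ["vm-a", "vm-b", "vm-c"]}
FORWARDING_RULES = {
    # rule name: (load balancer IP, port, target pool)
    "fr-http": ("198.51.100.10", 80, "web-pool"),
    "fr-https": ("198.51.100.10", 443, "web-pool"),
}


def five_tuple_hash(src_ip: str, src_port: int, dst_ip: str, dst_port: int, protocol: str) -> int:
    """Deterministic 64-bit hash of a connection's 5-tuple."""
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def route(rule_name: str, src_ip: str, src_port: int, protocol: str = "TCP") -> str:
    """Pick the instance that receives a packet arriving on a forwarding rule."""
    dst_ip, dst_port, pool_name = FORWARDING_RULES[rule_name]
    instances = TARGET_POOLS[pool_name]
    if not instances:
        raise RuntimeError(f"target pool {pool_name} has no instances")
    h = five_tuple_hash(src_ip, src_port, dst_ip, dst_port, protocol)
    return instances[h % len(instances)]


if __name__ == "__main__":
    # Every packet of this connection lands on the same instance.
    print(route("fr-http", "203.0.113.7", 51000))
    print(route("fr-http", "203.0.113.7", 51000))
    # A different source port is a different connection and may land elsewhere.
    print(route("fr-https", "203.0.113.7", 51001))
```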

Internal passthrough Network Load Balancers:

- It distributes traffic among internal virtual machine (VM) instances within the same region of the Virtual Private Cloud (VPC) network.

- It lets you run and scale services behind an internal IP address that is accessible only to systems within the same VPC network or in networks connected to it.

- Internal passthrough Network Load Balancers can also be accessed through VPC Network Peering, Cloud VPN, and Cloud Interconnect.
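The practical difference between proxy and passthrough Network Load Balancers is what the backend sees. The following Python sketch models this with plain data classes. A proxy opens a new connection to the backend, so the backend sees the proxy's address as the source. A passthrough load balancer delivers the client's packet unchanged, and the backend replies directly to the client (DSR). The IP addresses (taken from documentation ranges) and ports are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """The addressing fields a backend can observe on an incoming packet."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str


def proxy_forward(pkt: Packet, proxy_ip: str, proxy_port: int,
                  backend_ip: str, backend_port: int) -> Packet:
    """Proxy: the client connection ends at the proxy, which opens a new
    connection to the backend, so the backend sees the proxy as the source."""
    return Packet(proxy_ip, proxy_port, backend_ip, backend_port, pkt.protocol)


def passthrough_forward(pkt: Packet) -> Packet:
    """Passthrough: the packet reaches the backend unchanged, including the
    client's source address and the load balancer's IP as the destination."""
    return pkt


def dsr_reply(received: Packet) -> Packet:
    """Direct server return: the backend answers the client directly, using
    the load balancer's IP (the original destination) as the reply's source."""
    return Packet(received.dst_ip, received.dst_port,
                  received.src_ip, received.src_port, received.protocol)


if __name__ == "__main__":
    client = Packet("203.0.113.7", 51000, "198.51.100.10", 443, "TCP")
    print(proxy_forward(client, "10.129.0.5", 40000, "10.0.0.2", 443))
    print(passthrough_forward(client))
    print(dsr_reply(passthrough_forward(client)))
```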

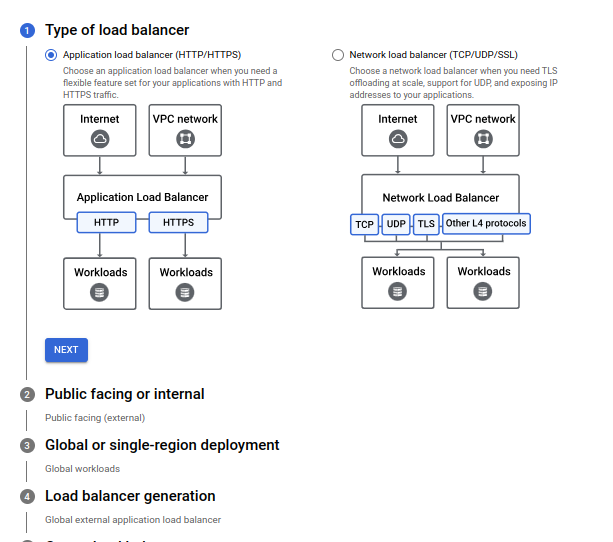

Step-by-Step Guide to Setting Up a Load Balancer in GCP

Step 1: Choose the Right Load Balancer

Determine the appropriate load balancer based on your application’s requirements:

● For global reach: Use external Application Load Balancers for HTTP(S) and external proxy Network Load Balancers for TCP.

● For internal or regional traffic: Use internal Application Load Balancers for HTTP(S), internal proxy Network Load Balancers for TCP, and passthrough Network Load Balancers for TCP/UDP.

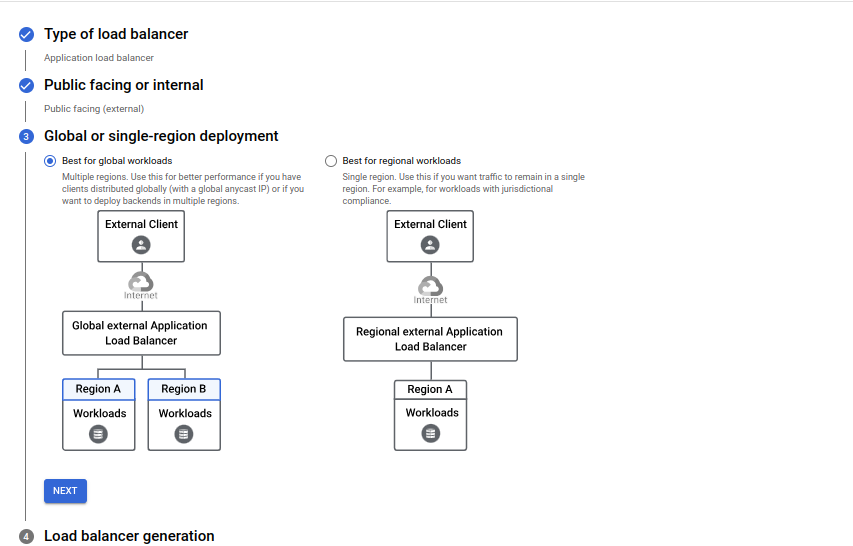

- Choose either a global or a regional load balancer:

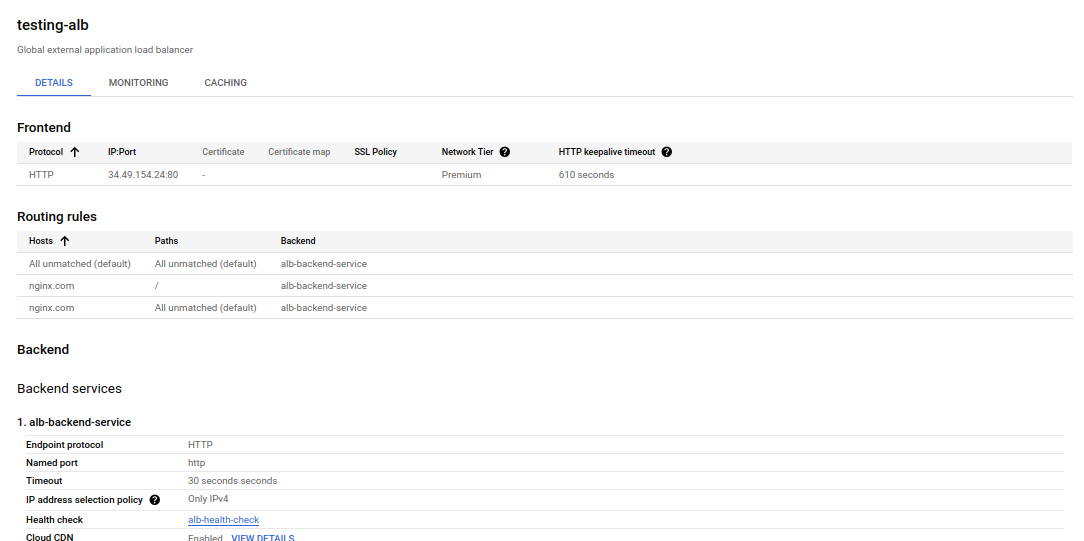
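The selection guidance in Step 1 can be condensed into a small Python helper. This is a hypothetical sketch that encodes only the rules listed above, plus the passthrough option for backends that must see the client's original packets. It ignores other factors such as network tier, backend type, and global versus regional deployment.

```python
def choose_load_balancer(internet_facing: bool, protocol: str, preserve_packets: bool = False) -> str:
    """Suggest a Google Cloud load balancer type from a few coarse requirements.

    internet_facing: True for external clients, False for clients in your VPC.
    protocol: "HTTP", "HTTPS", "TCP", "UDP", or another IP protocol name.
    preserve_packets: True if backends must see the client's original packets
        (passthrough instead of proxy).
    """
    scope = "External" if internet_facing else "Internal"
    proto = protocol.upper()
    if proto in ("HTTP", "HTTPS") and not preserve_packets:
        return f"{scope} Application Load Balancer"
    if proto == "TCP" and not preserve_packets:
        return f"{scope} proxy Network Load Balancer"
    # UDP and other IP protocols (or TCP traffic that must not be proxied)
    # go to a passthrough Network Load Balancer.
    return f"{scope} passthrough Network Load Balancer"


if __name__ == "__main__":
    print(choose_load_balancer(True, "https"))  # External Application Load Balancer
    print(choose_load_balancer(False, "tcp"))   # Internal proxy Network Load Balancer
    print(choose_load_balancer(False, "udp"))   # Internal passthrough Network Load Balancer
```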

Step 2: Configure the Load Balancer

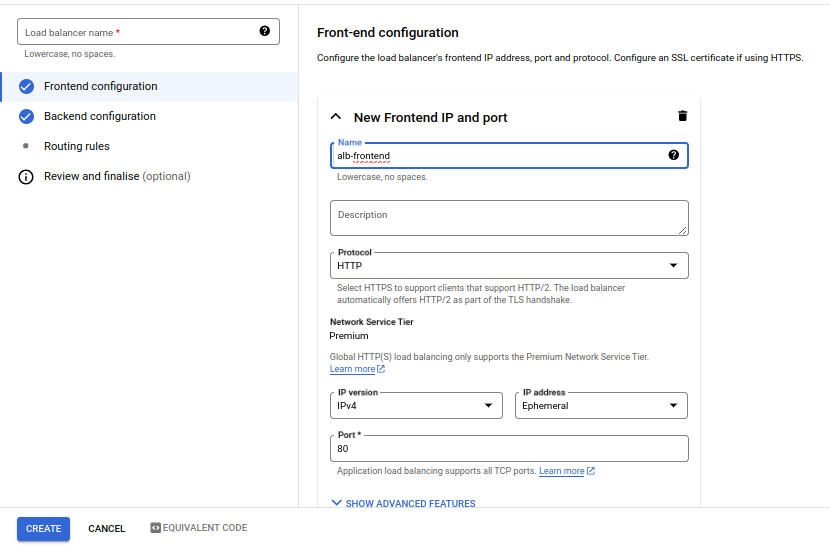

a. For global workloads:

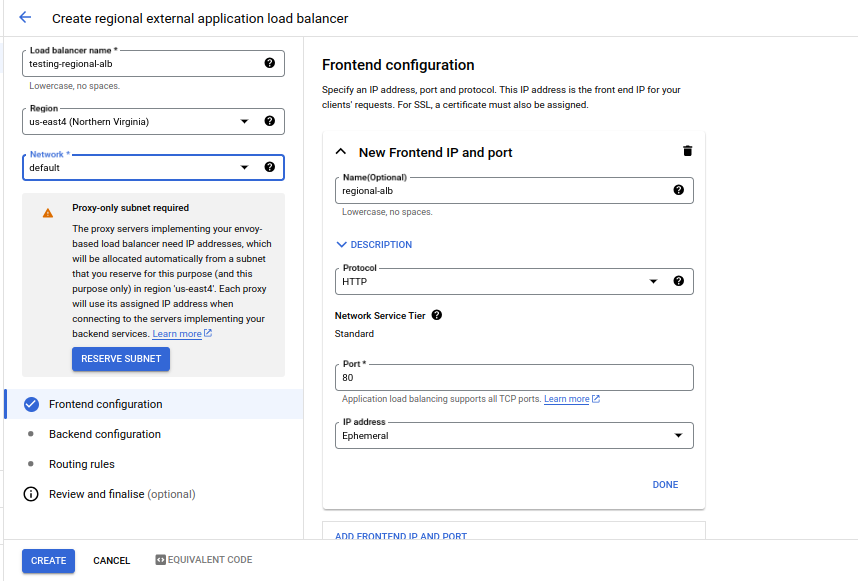

b. For regional workloads:

1. Choose your region.

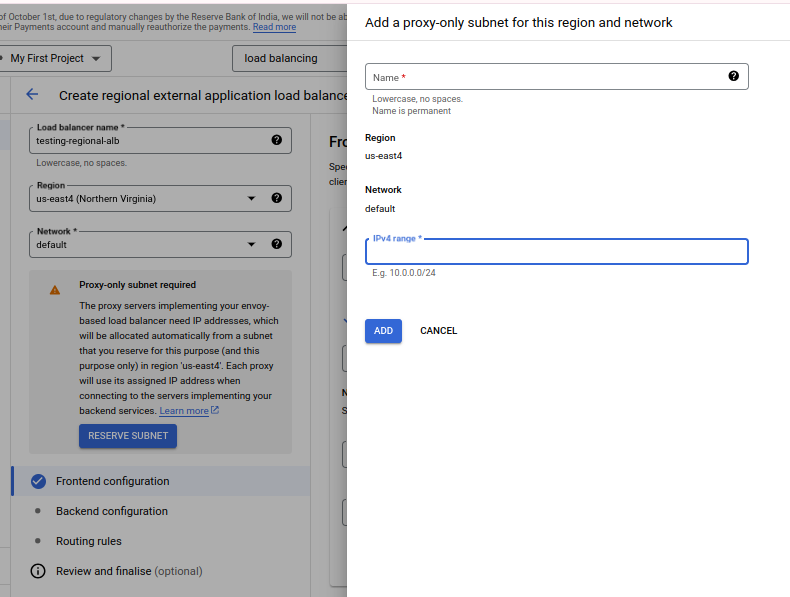

2. Create a proxy-only subnet for the load balancer's proxies.

A proxy-only subnet is a dedicated subnet in your Virtual Private Cloud (VPC) network that supplies the IP addresses used by the load balancer's proxies, such as those of a regional Application Load Balancer (ALB). The proxies receive client traffic and forward it to the backend services or applications.

c. Set up the frontend: Define the IP address and port for your load balancer. For external load balancers, choose an external IP address. For internal load balancers, use an internal IP address.

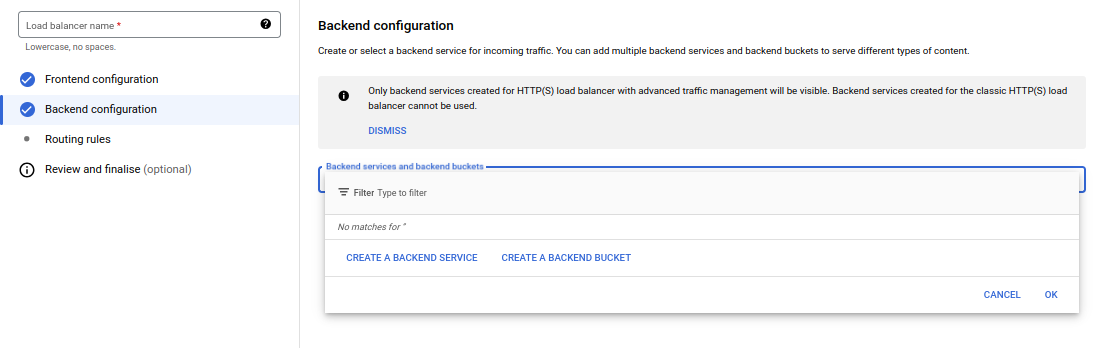

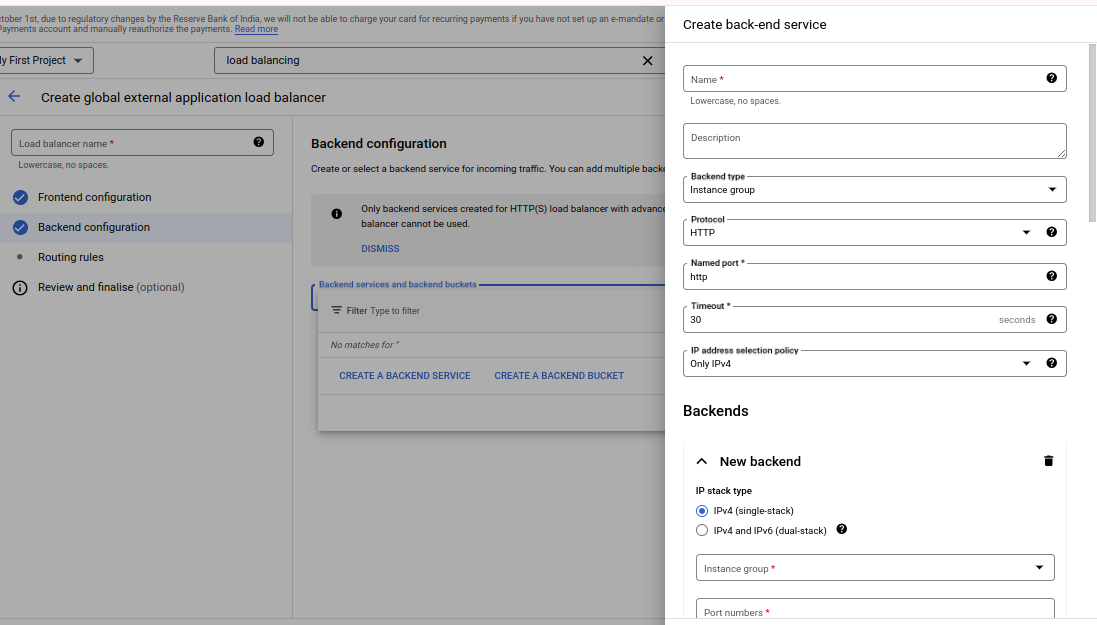

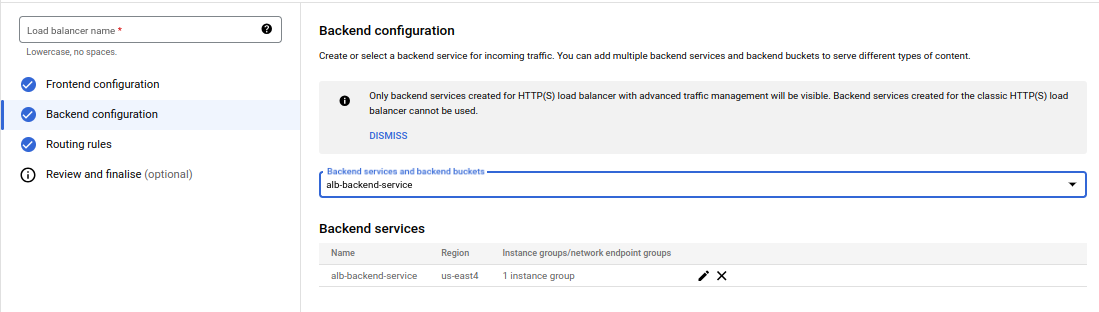
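Before you create the proxy-only subnet, it can help to sanity-check the range you plan to use. The sketch below uses Python's standard `ipaddress` module to confirm that a candidate range is large enough and does not overlap existing subnets in the VPC. The 64-address (/26) minimum is an assumption based on Google Cloud's documented guidance. Confirm the current requirement before relying on it. The ranges in the example are hypothetical.

```python
import ipaddress

# Assumed minimum size for a proxy-only subnet (/26 = 64 addresses);
# verify against current Google Cloud documentation.
MIN_PROXY_ONLY_ADDRESSES = 64


def check_proxy_only_subnet(candidate: str, existing: list[str]) -> list[str]:
    """Return problems found with a candidate proxy-only subnet (empty list if none)."""
    net = ipaddress.ip_network(candidate)
    problems = []
    if net.num_addresses < MIN_PROXY_ONLY_ADDRESSES:
        problems.append(f"{candidate} has only {net.num_addresses} addresses")
    for other in existing:
        if net.overlaps(ipaddress.ip_network(other)):
            problems.append(f"{candidate} overlaps existing subnet {other}")
    return problems


if __name__ == "__main__":
    vpc_subnets = ["10.128.0.0/20"]  # hypothetical existing subnet
    print(check_proxy_only_subnet("10.129.0.0/23", vpc_subnets))  # []
    print(check_proxy_only_subnet("10.128.4.0/28", vpc_subnets))  # two problems
```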

Step 3: Create a Backend Service

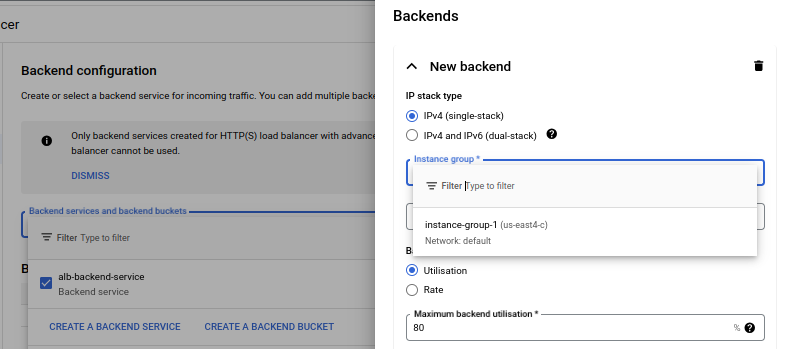

1. Create a backend service: Define the backend service that connects your load balancer to the backend instances or endpoints.

2. Specify the instance group or network endpoint group (NEG) that will serve the traffic.

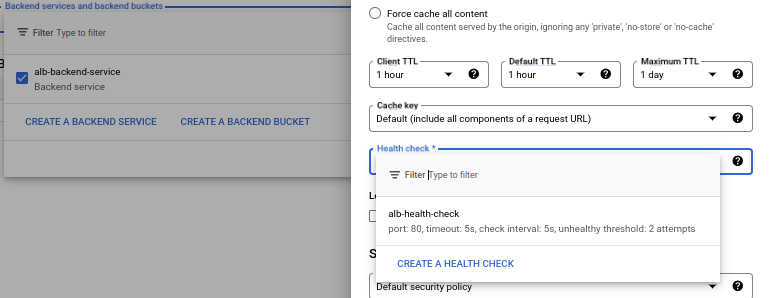

3. Configure health checks: Set up health checks so that traffic goes only to backend instances that are healthy and able to serve it.

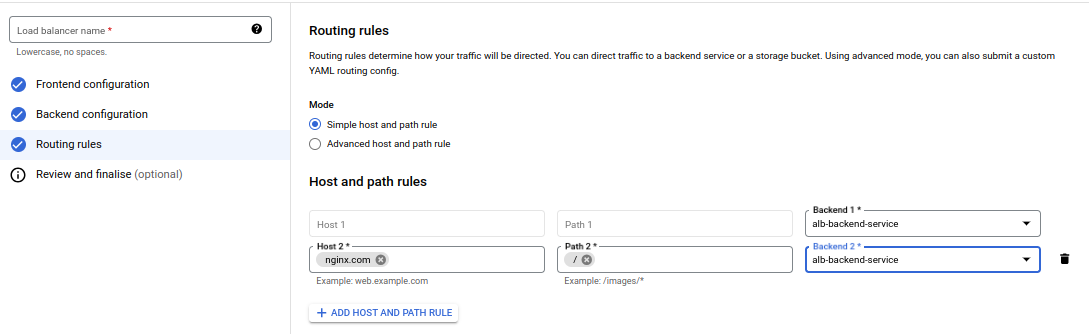
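Health checks typically mark a backend unhealthy only after several consecutive failed probes, and mark it healthy again only after several consecutive successes. The Python sketch below models that behavior. Its `healthy_threshold` and `unhealthy_threshold` fields are named after the corresponding health check settings. The values used, and the choice to start each backend as unhealthy until it passes enough probes, are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BackendHealth:
    """Health state of one backend, driven by consecutive probe results.

    Thresholds are illustrative. The backend starts unhealthy (a modeling
    assumption) and must pass healthy_threshold probes in a row first.
    """
    healthy_threshold: int = 2
    unhealthy_threshold: int = 2
    healthy: bool = False
    _streak: int = field(default=0, init=False, repr=False)

    def record(self, probe_ok: bool) -> bool:
        """Record one probe result and return the (possibly updated) state."""
        if probe_ok == self.healthy:
            self._streak = 0  # probe agrees with the current state
        else:
            self._streak += 1  # one more probe contradicting the current state
            needed = self.healthy_threshold if probe_ok else self.unhealthy_threshold
            if self._streak >= needed:
                self.healthy = probe_ok
                self._streak = 0
        return self.healthy


if __name__ == "__main__":
    hb = BackendHealth(healthy_threshold=2, unhealthy_threshold=3)
    probes = [True, True, False, True, False, False, False]
    print([hb.record(ok) for ok in probes])
    # [False, True, True, True, True, True, False]
```

A single failed probe does not remove the backend. Only the third failure in a row does, which keeps brief network glitches from flapping backends in and out of rotation.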

Step 4: Routing Rules

Set up routing rules appropriate to your load balancer type. For HTTP(S) load balancers, configure a URL map that routes requests to backend services based on host and path patterns.
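A URL map first matches the request's host to a path matcher. It then matches the path against that matcher's path rules, and falls back to a default backend service if nothing matches. The following Python sketch models that lookup. It assumes the most specific (longest) matching path rule wins and that a trailing `/*` acts as a prefix wildcard. This is a simplified model, not the URL map API, and the host names and backend service names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PathMatcher:
    """Path rules for one host; the longest matching rule wins (assumed)."""
    default_service: str
    path_rules: dict[str, str] = field(default_factory=dict)  # pattern -> service

    def match(self, path: str) -> str:
        best_service, best_len = self.default_service, -1
        for pattern, service in self.path_rules.items():
            if pattern.endswith("/*"):
                matched = path.startswith(pattern[:-1])  # "/video/*" -> prefix "/video/"
            else:
                matched = path == pattern  # patterns without a wildcard must match exactly
            if matched and len(pattern) > best_len:
                best_service, best_len = service, len(pattern)
        return best_service


@dataclass
class UrlMap:
    """Host rules map a host name to a path matcher; unknown hosts use the default."""
    default_service: str
    host_rules: dict[str, PathMatcher] = field(default_factory=dict)

    def route(self, host: str, path: str) -> str:
        matcher = self.host_rules.get(host)
        return matcher.match(path) if matcher is not None else self.default_service


url_map = UrlMap(
    default_service="web-backend",
    host_rules={
        "example.com": PathMatcher(
            default_service="web-backend",
            path_rules={
                "/video/*": "video-backend",
                "/video/live/*": "live-backend",
                "/api/*": "api-backend",
            },
        ),
    },
)

if __name__ == "__main__":
    print(url_map.route("example.com", "/video/live/stream1"))  # live-backend
    print(url_map.route("example.com", "/video/clip.mp4"))      # video-backend
    print(url_map.route("other.example", "/api/users"))         # web-backend
```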

Step 5: Testing and Monitoring

Deploy and test: Deploy your load balancer and send test traffic to confirm that it routes requests correctly to the backend instances.
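One simple way to test routing is to send a batch of requests to the load balancer's frontend and count which backend answered each one. This Python sketch assumes each backend serves a test page whose body is its own hostname, which is a hypothetical setup. The `fetch` parameter lets you swap in a different HTTP client, or a fake one for offline checks.

```python
import collections
import urllib.request
from typing import Callable


def fetch_body(url: str, timeout: float = 5.0) -> str:
    """Fetch a URL and return the response body as stripped text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8").strip()


def sample_distribution(
    url: str, n: int = 50, fetch: Callable[[str], str] = fetch_body
) -> collections.Counter:
    """Send n requests to url and count responses per backend.

    Assumes (hypothetically) that every backend returns its own hostname
    as the response body, so each distinct body identifies one backend.
    """
    return collections.Counter(fetch(url) for _ in range(n))


# Example (replace LB_IP with your load balancer's frontend IP address):
# counts = sample_distribution("http://LB_IP/", n=100)
# for backend, hits in counts.most_common():
#     print(f"{backend}: {hits}")
```

An uneven count is not necessarily a fault. Load balancers may weigh backends by capacity, proximity, or session affinity, so compare the result with the balancing mode you configured.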

Conclusion

GCP’s load balancers offer high availability, security, and performance for managing application traffic. By understanding their types and use cases, you can select the right solution to keep your applications resilient and scalable.