Building Efficient Data ETL Pipelines: Key Best Practices [Part-2]

In the first part of ETL data pipelines, we explored the importance of ETL processes, and their core components, and discussed the different types of ETL pipelines. Now, in this second part, we will dive deeper into some of the key challenges faced when implementing data ETL pipelines, outline best practices to optimize these processes and discuss the ETL data pipelines for dim and fact tables.

Challenges in setting up an ETL Pipeline

While ETL pipelines manage diverse data efficiently, building and maintaining them can be challenging. Key factors like data format, volume, and speed must be considered. The critical components of ETL are essential, but if not properly analyzed, they can become significant challenges.

Some of the challenges are listed below.

-

-

Data Related Challenges

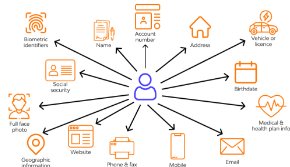

- PII Data – Since there can be multiple data sources, each can contain different information. Some of which can be Personal Identifiable Information (PII). Having granular control over the data can help in identifying and eliminating any such information.

PII Data

- Variety of Data – When dealing with different data formats (Structured, semi-structured, and unstructured) one must be able to use specific tools and techniques to efficiently handle them.

- Dirty Data – Every time data is received there is a high chance that the data might be dirty and needs to be cleaned before processing. Data might contain inaccurate, missing, or outdated values which need to be taken care of as it can affect the business and its reporting.

Dirty Data

- PII Data – Since there can be multiple data sources, each can contain different information. Some of which can be Personal Identifiable Information (PII). Having granular control over the data can help in identifying and eliminating any such information.

-

Data Integration Challenges

- Varied Data Sources: One of the initial level problems faced in setting up the pipelines is that nowadays, etl is not just limited to one data source and needs to be fetched from multiple sources and of varied data formats(structured, semi-structured, unstructured). Identifying these sources and using the correct tools and techniques is vital.

- Schema Mismatches: As the data sources have increased it is easy to face schema mismatch issues when integrating them. Either some of the fields can be missing or a particular column might be of a different data type resulting in conflicts.

-

Scalability Challenges

- Data Volume and Velocity – As the data increases data pipelines must be able to adapt and process the data to ensure smooth functioning.

- Performance and Cost Overhead – Data pipelines might output desired results at the initial stages but can cause performance overhead and increased costs if not implemented correctly. These 2 aspects need to be taken care of while creating a data pipeline.

- Parallel Processing – Parallel processing is one of the widely used terms in the data world but when not implemented correctly can lead to data inaccuracy. Choosing between auto-scaling pipelines and self-managed clusters can incur different monetary costs as well as different implementation practices.

-

ETL Best practices and guidelines for data warehousing

1. Limit the amount of incoming data to be processed.

-

-

- Only the data that is new or has some modification should be filtered for the pipeline.

- The pipeline should focus on optimizing the data intake to minimize data volume.

- This will reduce the resources and costs required for the pipeline.

- The ELT will become faster as it has to do less computations and transformations.

- Easier to debug and maintain data quality and accuracy.

- Recovery is easier as only a subset of the data will be disrupted.

-

2. The dimension tables should be processed before the fact tables.

-

-

- The dimension table (attribute/entity information) should be loaded before the fact table (transactional data).

- The fact table has columns that reference the dimension table. So, to maintain data accuracy, this rule should be followed. It will ensure the necessary reference data is available for the fact table.

- Disregarding this practice can lead to incorrect or incomplete data in the warehouse. It can increase non-existent values in the fact tables.

- This also ensures that the transaction data is correctly referencing the up-to-date version of the dimension information.

-

3. Opportunities for parallel processing.

-

-

-

- This refers to processing a group of independent tasks in parallel instead of one after another to make the pipeline faster.

- It makes better use of resources by distributing the workload.

- This also reduces the scenario of a bottleneck if the tasks are not dependent on each other.

- This also increases the fault tolerance as failure of one task will not stop other independent data from loading.

-

-

4. Maintaining a data catalog

-

-

- A data catalog is a central repository to store all the information regarding the data in the system. It includes data owners, metadata, attribute information, and other things.

- It helps in better understanding and organization of the data system.

- It helps to smoothly transition a new user into the system.

- It leaves no space for confusion or misunderstanding among different users.

-

5. Limiting data for the end users

-

-

- Certain data, such as personal or confidential information, may not be suitable for sharing with all end users. To control access, separate destination tables should be created for different user groups.

- Access to each final destination should be strictly controlled and highly specific.

- The final storage location, whether it is cloud storage or a data warehouse, should only hold data relevant to the specific use case.

-

Dimension table ETL

The following are the best practices while processing the dimension tables:

Step 1: Data preparation

-

-

- Change Data Capture(CDC): maintain a transaction data timestamp to govern if the data has changed or not.

- Database logs: extract the logs that represent changes in the tables and prepare the data for processing using these logs. This is a bit complex as this requires understanding and parsing the change info from the logs.

- Database scan and compare: bring a large subset and then compare it with the current database to detect changed records and then update the DWH from it.

-

Step 2: Data transformation

-

-

- Data unification: The data is captured from multiple sources which have different structures. It needs to be modeled into a single structure for better analysis and reporting. This is important to maintain consistency in the data.

- Data type and size unification: This is to ensure data is in alignment with the downstream system to avoid any pipeline failure or reporting issues.

- Deduplication: Removing duplicates from the data to reduce redundancy.

- Vertical slicing: Need to drop the unnecessary columns which are not required for the use-case. This also eases out the pipeline as it saves time and resources.

- Horizontal slicing: Need to filter the data based on the specific use cases of the data mart/table. Populating unnecessary data which do not meet the criteria is of no use.

- Correcting known errors: ETL should address and fix the data quality issues that are known to the team.

-

Step 3: Process new dimension rows

-

-

- The new rows should be appended first to the tables before changing the existing ones.

-

Step 4: Process SCD type 1 changes

-

-

- The existing data will be overwritten with new data. It is an in-place update.

- No maintenance of history. The table will have the latest record only.

- Note: If we have a type 2 change occur before the type 1 change, then more than one row will be needed to update when type 1 changes happen. This can be done with the help of a natural/unique key which will not change for the entity ever.

-

Step 5: Process SCD type 2 changes

-

-

- This rule creates a new row every time a change takes place for that entity. So, it is an append with a surrogate key.

- The history will be maintained by creating a new record for each change while keeping the old record active until a specific end date.

-

Fact tables ETL

The following are the best practices at each step while processing the fact tables of the warehouse:

-

- Process all the dimensions table: The dimensions table should be already updated before the fact to maintain the references and accuracy of data and avoid inconsistency.

- The fact tables work on append mode. The new rows are inserted into the tables with correct referencing with the dim tables.

- There is a case that needs special handling while appending the data in fact tables. Below is the scenario explained:

- There is a dim table of SCD 2 type. It has 3 records for a particular entity. While inserting a new row in fact tables for this entity, we need to be sure of which version from the dim table it belongs to. This can be done by introducing either an ‘active flag’ column or a ‘start date’ and ‘end date’ column in the dimension table. Once, we can correctly map the fact row with the correct version of dim, we can accurately load data into the fact table.

Conclusion

As we’ve seen, ETL data pipelines come with their own set of challenges, but by following best practices, these hurdles can be navigated. A well-structured ETL pipeline is crucial for ensuring accurate, timely, and reliable data flow. By addressing both the complexities and solutions, we hope this series equips you with the insights needed to build efficient ETL systems.