Running Docker Inside a Docker Container Using a Custom Docker Image

Introduction and Scenario

There are various use cases for running Docker inside a Docker container, which we list later on, but one that often comes in handy is running a Docker container as a Jenkins agent. Suppose we want to build our application image, push it to a Docker registry such as Amazon ECR, and then deploy it to a cluster such as EKS or ECS via Jenkins or another CI/CD tool like Buildkite or GoCD. In that case, we can run Docker as an agent and, inside that agent container, run Docker commands to build and push the images.
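The build-and-push step described above might look roughly like the following inside the agent container. This is a sketch, not a complete pipeline: the helper names `ecr_registry` and `build_and_push` and the account ID, region, and repository in the usage line are hypothetical, and it assumes the AWS CLI is installed in the agent image with working credentials. The ECR login pipes `aws ecr get-login-password` into `docker login --password-stdin`, which is the approach AWS documents for ECR.

```bash
#!/usr/bin/env bash
# Sketch of a CI step that builds an image and pushes it to Amazon ECR.
# Helper names and all arguments are hypothetical placeholders.
set -euo pipefail

# Print the ECR registry host for an AWS account ID and region.
ecr_registry() {
  local account_id=$1 region=$2
  printf '%s.dkr.ecr.%s.amazonaws.com\n' "$account_id" "$region"
}

# Log in to ECR, build the image from the current directory, and push it.
# Assumes the AWS CLI is installed and a Docker daemon is reachable.
build_and_push() {
  local account_id=$1 region=$2 repo=$3 tag=$4
  local registry image
  registry=$(ecr_registry "$account_id" "$region")
  image="$registry/$repo:$tag"

  aws ecr get-login-password --region "$region" \
    | docker login --username AWS --password-stdin "$registry"
  docker build -t "$image" .
  docker push "$image"
}
```

A Jenkins job could then call something like `build_and_push 123456789012 us-east-1 my-app "$BUILD_NUMBER"`, using the Jenkins build number as the tag. The deployment to EKS or ECS would be a separate step.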

Prerequisites

- Docker should be pre-installed on the host machine

Use Cases

Docker-in-Docker means running Docker, and therefore Docker containers, inside another Docker container.

Some of the use cases for this are:

- One potential use case for Docker-in-Docker is a CI pipeline, where we need to build and push Docker images to a container registry after a successful code build.

- Building Docker images on a VM is straightforward. But when we use Docker containers as Jenkins agents for our CI/CD pipelines, the agent itself has to run Docker commands, and Docker-in-Docker is one way to make that possible.

- Sandboxed environments:

  - Testing environments: In CI/CD pipelines, developers often need to run tests in an environment that closely mimics the production setup. By running Docker containers within another Docker container, we can create a sandboxed environment where we can quickly spin up test environments, run tests, and tear them down without affecting the host system.

  - Isolation: Nesting containers keeps the inner containers, images, and networks separate from those managed by the host's Docker daemon. Keep in mind, though, that the outer container must run in privileged mode, which grants it nearly full access to the host, so this setup is not a strong security boundary for untrusted or potentially malicious code.

- Experimentation on our local development workstation.

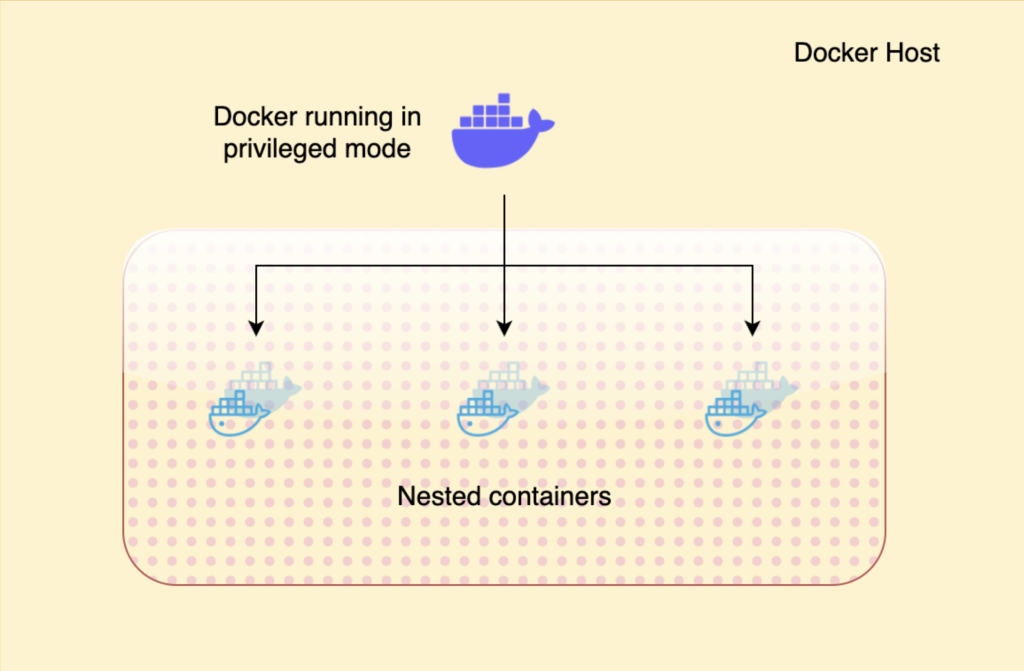

How does this work?

This relies on Docker's “privileged” mode for containers. A privileged container gets almost all the capabilities of its host machine with respect to kernel features and host device access, which is what allows us to run Docker within Docker itself. Here's how:

Solution

- First, we need a Dockerfile with the configuration required to run Docker inside Docker:

```dockerfile
FROM ubuntu:latest

# Avoid interactive prompts from apt during the build
ARG DEBIAN_FRONTEND=noninteractive

# Updating the container and installing dependencies
RUN apt-get update && \
    apt-get install -y apt-transport-https ca-certificates curl lxc iptables

# Installing Docker with the convenience script from get.docker.com
RUN curl -fsSL https://get.docker.com/ | sh

# Installing the wrapper script
COPY ./script.sh /usr/local/bin/script.sh
RUN chmod +x /usr/local/bin/script.sh

# Define a volume for the inner (secondary) Docker daemon's data
VOLUME /var/lib/docker

CMD ["script.sh"]
```

- The above Dockerfile is doing the following:

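- It installs a few packages: iptables (the Docker daemon uses it to set up container networking), lxc (a dependency of early Docker versions, which used LXC to run containers), curl (to download the Docker installation script), and ca-certificates (because Docker needs to validate SSL certificates when communicating with Docker Hub and other registries). It then installs Docker itself and copies in the wrapper script.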

- /var/lib/docker, the directory where Docker stores its images and containers, is declared as a volume. The container's own root filesystem is a union mount (AUFS in older Docker setups, overlayfs in current ones), and the inner Docker daemon cannot run its usual storage driver on top of another union mount. Declaring a volume makes /var/lib/docker a regular directory backed by the host's filesystem.
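To see the problem the volume solves, one can compare filesystem types from inside the container. The sketch below is an illustration under stated assumptions: it uses GNU `stat -f -c %T`, which prints names such as `overlayfs` or `ext2/ext3`, and the helper names `is_union_fs`, `fs_type`, and `check_docker_root` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: check whether a path sits on a union filesystem (overlayfs or AUFS),
# which the inner Docker daemon should not use for its data directory.
# Assumes GNU coreutils stat; helper names are hypothetical.
set -euo pipefail

# Return success if the given filesystem type name is a union filesystem.
is_union_fs() {
  case "$1" in
    overlayfs|aufs) return 0 ;;
    *) return 1 ;;
  esac
}

# Print the filesystem type of a path (e.g. "overlayfs", "ext2/ext3", "xfs").
fs_type() {
  stat -f -c %T "$1"
}

# Warn if the Docker data directory (default /var/lib/docker) is on a union filesystem.
check_docker_root() {
  local dir=${1:-/var/lib/docker}
  local type
  type=$(fs_type "$dir")
  if is_union_fs "$type"; then
    echo "WARNING: $dir is on $type; declare it as a volume" >&2
    return 1
  fi
  echo "$dir is on $type"
}
```

Inside a container built from the Dockerfile above, `fs_type /` would typically report the union filesystem, while `check_docker_root` should report the host's filesystem type for the volume.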

- Here is the wrapper script (script.sh) that the image runs as its default command when a container starts:

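```bash
#!/bin/bash

# Ensure that all nodes in /dev/mapper correspond to mapped devices
# (skipped if dmsetup is not installed)
command -v dmsetup >/dev/null 2>&1 && dmsetup mknodes

# Defining the cgroup mount point for our child containers
CGROUP=/sys/fs/cgroup

# Default to logging on stdio unless LOG is set (e.g. LOG=file)
: "${LOG:=stdio}"

[ -d "$CGROUP" ] || mkdir "$CGROUP"

# Mounting the cgroup filesystem if it is not already mounted
mountpoint -q "$CGROUP" || mount -n -t tmpfs -o uid=0,gid=0,mode=0755 cgroup "$CGROUP" || {
    echo "Can not make a tmpfs mount"
    exit 1
}

# Mounting securityfs if the directory exists but is not mounted yet
if [ -d /sys/kernel/security ] && ! mountpoint -q /sys/kernel/security; then
    mount -t securityfs none /sys/kernel/security || {
        echo "Could not mount /sys/kernel/security."
    }
fi

# Removing a stale docker.pid file left over from a previous run
rm -f /var/run/docker.pid

# If a custom port is given, run the daemon in the foreground and also listen on TCP
# (DOCKER_DAEMON_ARGS is left unquoted on purpose so it can hold several flags)
if [ -n "$PORT" ]; then
    exec dockerd -H "tcp://0.0.0.0:$PORT" -H unix:///var/run/docker.sock \
        $DOCKER_DAEMON_ARGS
else
    if [ "$LOG" = "file" ]; then
        dockerd $DOCKER_DAEMON_ARGS &>/var/log/docker.log &
    else
        dockerd $DOCKER_DAEMON_ARGS &
    fi

    # Wait up to 60 seconds for the daemon to accept connections
    (( timeout = 60 + SECONDS ))
    until docker info >/dev/null 2>&1; do
        if (( SECONDS >= timeout )); then
            echo "Timed out trying to connect to the internal Docker daemon." >&2
            break
        fi
        sleep 1
    done

    # Run the command passed to the container, or fall back to a login shell
    [[ $1 ]] && exec "$@"
    exec bash --login
fi
```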

- The above script does the following:

- It ensures that the cgroup and securityfs pseudo-filesystems are mounted, because Docker needs them, and removes any stale docker.pid file.

- It checks whether a PORT environment variable was passed (for example, with docker run -e PORT=2375). If so, the Docker daemon runs in the foreground and listens for API requests on that TCP port as well as on the Unix socket. If not, it starts the daemon in the background (logging to /var/log/docker.log when LOG=file) and then runs the command passed to the container, or an interactive login shell if none was given.
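For the PORT branch, here is a rough sketch of how one might start the container and talk to the inner daemon from the host, once the docker:v1 image has been built as described in the next steps. Port 2375 and the helper names `run`, `start_dind_tcp`, and `inner_docker_version` are illustrative assumptions. Exposing the Docker API over plain TCP gives unauthenticated, root-level access to anyone who can reach the port, so this only suits local experiments; dockerd itself logs a warning about such listeners, and newer releases may require extra flags to allow them.

```bash
#!/usr/bin/env bash
# Sketch: run the docker:v1 image with the wrapper script's PORT branch enabled.
# Port 2375 and the helper names are illustrative assumptions.
# WARNING: plain TCP exposes the inner Docker API without authentication.
set -euo pipefail

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Start a privileged container whose inner dockerd also listens on TCP,
# publishing that port on the host.
start_dind_tcp() {
  local port=${1:-2375}
  run docker run -d --privileged -e "PORT=$port" -p "$port:$port" docker:v1
}

# Talk to the inner daemon from the host through the published port.
inner_docker_version() {
  local port=${1:-2375}
  run docker -H "tcp://127.0.0.1:$port" version
}
```

For example, `start_dind_tcp 2375` followed by `inner_docker_version 2375` should print version information from the nested daemon, while `DRY_RUN=1 start_dind_tcp` only prints the `docker run` command it would execute, which is handy for checking a CI job's commands.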

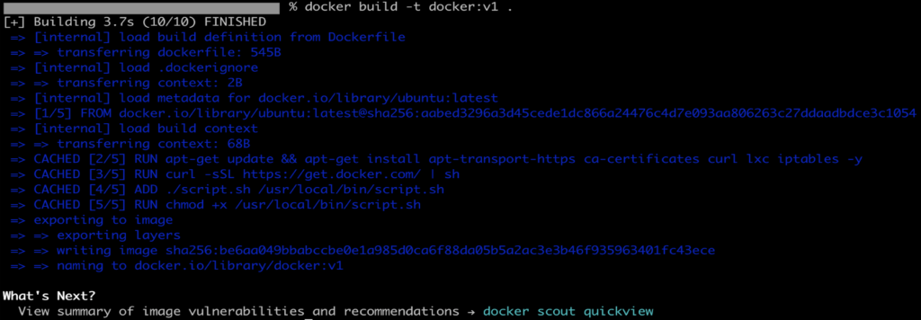

- Now, build the Docker image from the directory that contains the Dockerfile and script.sh:

```bash
docker build -t docker:v1 .
```

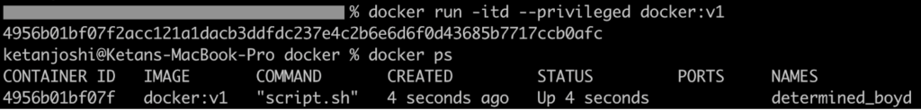

- Now, run the container in privileged mode:

```bash
docker run -itd --privileged docker:v1
```

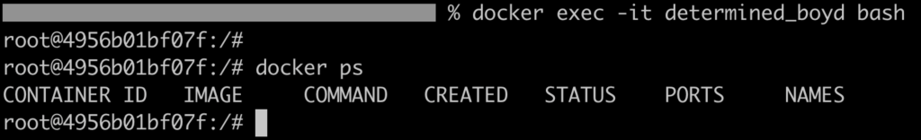

- Now we can use the Docker daemon running inside that container. First, open a shell inside the container (use the name or ID shown by docker ps), then run Docker commands from there:

```bash
docker exec -it <container_name> bash
```
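Once inside the container, it can be worth confirming that privileged mode actually took effect before running Docker commands. The sketch below is one way to do that: it reads the shell's effective capability mask (the CapEff line of /proc/<pid>/status) and tests bit 21, CAP_SYS_ADMIN, which the wrapper script's mount calls need. The helper names are hypothetical, and the check covers only one capability, not everything --privileged grants (such as access to host devices).

```bash
#!/usr/bin/env bash
# Sketch: verify inside the container that privileged mode took effect.
# Helper names are hypothetical.
set -euo pipefail

readonly CAP_SYS_ADMIN=21   # capability number from linux/capability.h

# Return success if the hex capability mask has the CAP_SYS_ADMIN bit set.
mask_has_sys_admin() {
  local mask=$1
  (( (16#$mask >> CAP_SYS_ADMIN) & 1 ))
}

# Print this shell's effective capability mask, e.g. "000001ffffffffff".
current_cap_eff() {
  awk '/^CapEff:/ { print $2 }' "/proc/$$/status"
}

# Check privileges, then exercise the inner Docker daemon.
check_inner_docker() {
  if ! mask_has_sys_admin "$(current_cap_eff)"; then
    echo "CAP_SYS_ADMIN missing: was the container started with --privileged?" >&2
    return 1
  fi
  docker version
  docker run --rm hello-world
}
```

Running `check_inner_docker` in the shell opened with docker exec should print both client and server versions and the hello-world greeting if the inner daemon is working; in a container started without --privileged it stops at the capability check.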

Conclusion

Running Docker containers inside another Docker container offers significant benefits, especially in scenarios where Docker containers serve as Jenkins agents for building and deploying images.

Usefulness for Jenkins Agents:

- Build Isolation: Docker-in-Docker allows Jenkins to create isolated build environments for each job or pipeline, ensuring that dependencies or changes in one build do not affect others.

- Environment Consistency: By encapsulating build environments within Docker containers, Jenkins ensures consistent build environments across different machines and eliminates “works on my machine” issues.

- Scalability: Jenkins can dynamically spin up multiple Docker containers to handle concurrent builds or stages, enabling scalability and efficient resource utilization.

- Resource Efficiency: Docker containers offer lightweight virtualization, allowing Jenkins to run multiple build agents on a single host machine without significant overhead.

Utilizing Docker-in-Docker for Jenkins agents enhances build isolation, environment consistency, scalability, and resource efficiency. While it offers significant advantages, carefully considering performance, security, and management complexities is necessary to ensure smooth operation and mitigate potential risks. With proper configuration and monitoring, Docker-in-Docker enables Jenkins to efficiently build and deploy Docker images in a controlled and reproducible manner.