Container Orchestration – Fundamentals and Best Practices

Introduction

More and more companies worldwide are adopting DevOps. They are breaking down siloed structures and automating delivery pipelines to shorten release cycles and eliminate redundant tasks. As DevOps gains popularity, containers are not far behind: container technology is transforming how applications are packaged. Used together, DevOps and containers enable real agility.

According to its Cloud-Enabling Technologies Market Monitor report, New York-based 451 Research estimates that the application container market will grow from $762m in 2016 to $2.7bn by 2020.

Container technology has emerged as a reliable means to quickly package, deploy, and run application workloads without physically moving hardware or operating systems. Containers standardize the environment, provide a self-sufficient runtime, and abstract away the specifics of the underlying operating system and hardware. They securely compartmentalize applications and allow them to run side by side on the same machine for efficient resource utilization.

When Docker brought containers into the mainstream, developers were building products on virtual machines (VMs), which were difficult to manage, hard to customize, needed large amounts of disk space, and turned out to be an expensive solution. Virtual machines also required manual effort to install and to snapshot in order to produce virtual disk images. They were also less flexible in the face of evolving business needs.

Docker brought in an efficient and neat way to create those images through the Dockerfile, a build script that automates the tedious task of creating snapshots manually. Docker images require less maintenance and provide greater security. This helps several kinds of IT projects: those where developers rely on a single test server built through scripts on a virtual machine, and those where the customer application must be deployed on a developer laptop despite differing computing environments. Docker images can be shared between developers to give them access to a debug environment, and they simplify software deployments, whether on a private cloud or on regular virtual machines.

Container Orchestration

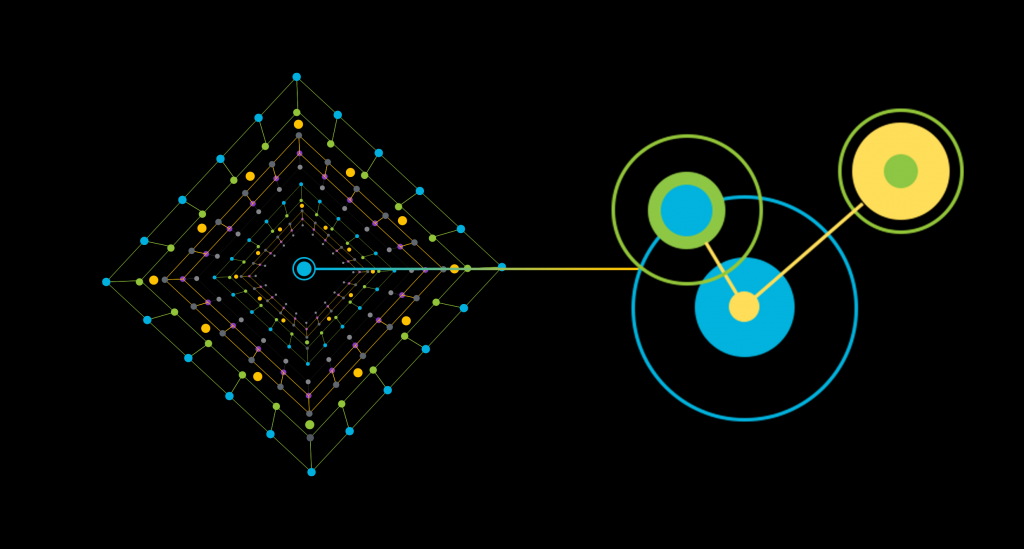

With Docker came the world of shared operating systems, simpler software deployments, and the ability to run applications reliably across different computing environments. Its simplicity and rich ecosystem make it extremely powerful and easy to use. However, to be of use, containers need to communicate with other containers as well as with the outside world. Docker containers can be configured to expose ports and directories on the host, and can be linked so that they communicate without exposing all of their resources to other systems.

In real deployments, developers usually need some degree of failover, load balancing, and, most importantly, service clustering. Deploying the multiple containers that make up an application can be optimized through automation, and this becomes more valuable as the number of containers and hosts increases. This type of automation is referred to as orchestration, and it is carried out with orchestration tools.

Orchestration tools primarily manage the creation, upgrading, and availability of multiple containers. They control the connectivity between containers and allow users to treat the entire cluster of containers as a single deployment target and build sophisticated applications.

The key functions of orchestration tools enable a developer to automate all aspects of application management, including:

- Initial placement

- Scheduling

- Deployment

- Steady-state activities such as updates

- Health monitoring functions that support scaling and failover
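
To make initial placement and scheduling more concrete, here is a minimal Python sketch of a scheduler that picks a healthy host with enough free capacity. The `Host` class, the `place` function, and the "most free memory wins" rule are assumptions made for illustration; real orchestrators use far richer placement strategies.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Host:
    name: str
    cpu_free: float            # free CPU cores (illustrative unit)
    mem_free: int              # free memory in MiB
    healthy: bool = True       # result of a health check (assumed input)
    containers: List[str] = field(default_factory=list)


def place(container: str, cpu: float, mem: int, hosts: List[Host]) -> Optional[Host]:
    """Place a container on the healthy host with the most free memory that fits."""
    candidates = [h for h in hosts
                  if h.healthy and h.cpu_free >= cpu and h.mem_free >= mem]
    if not candidates:
        return None  # nothing fits; a real tool might queue the request or scale out
    best = max(candidates, key=lambda h: h.mem_free)
    best.cpu_free -= cpu
    best.mem_free -= mem
    best.containers.append(container)
    return best


hosts = [
    Host("node-a", cpu_free=2.0, mem_free=4096),
    Host("node-b", cpu_free=4.0, mem_free=8192),
    Host("node-c", cpu_free=8.0, mem_free=16384, healthy=False),
]

for name in ["web-1", "web-2", "web-3"]:
    chosen = place(name, cpu=1.0, mem=3072, hosts=hosts)
    print(name, "->", chosen.name if chosen else "unschedulable")
```

In this run the unhealthy `node-c` is never chosen even though it has the most capacity, which is the kind of health-aware placement the list above describes.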

A Closer Look at Three Popular Orchestration Platforms

Deploying on medium to large scale platforms requires resource scheduling, which in practice calls for an orchestration tool. The most popular container orchestration tools across the industry are:

Docker Swarm

Docker Swarm is Docker’s own container orchestration tool. Its objective is to use the same Docker API that works with the core Docker Engine. Instead of targeting an API endpoint that represents a single Docker Engine, it transparently deals with an endpoint associated with a set of Docker Engines. The primary advantage of this approach is that existing tools and APIs continue to work with a cluster in the same way they work with a single instance. Swarm also prevents provisioning of containers on faulty hosts via basic health monitoring.

Developers create their applications using Docker’s tooling, CLI, and Compose, and those applications do not have to be re-coded to accommodate an orchestrator.
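
The "same API for one engine or many" idea can be sketched in Python as follows. The `Engine` and `Cluster` classes and their `run` method are hypothetical stand-ins written for this example; they are not the Docker SDK or the Swarm API.

```python
class Engine:
    """Stand-in for a single container engine (hypothetical, not the Docker SDK)."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy
        self.containers = []

    def run(self, image):
        self.containers.append(image)
        return f"{image}@{self.name}"


class Cluster:
    """Offers the same run() call, but spreads work across healthy engines."""

    def __init__(self, engines):
        self.engines = engines

    def run(self, image):
        healthy = [e for e in self.engines if e.healthy]
        if not healthy:
            raise RuntimeError("no healthy engine available")
        # Choose the least-loaded healthy engine; faulty hosts are skipped.
        target = min(healthy, key=lambda e: len(e.containers))
        return target.run(image)


def deploy(endpoint, images):
    """Client code that works unchanged against an Engine or a Cluster."""
    return [endpoint.run(image) for image in images]


single = Engine("laptop")
swarm = Cluster([Engine("node-1"), Engine("node-2", healthy=False), Engine("node-3")])

print(deploy(single, ["web", "db"]))
print(deploy(swarm, ["web", "db", "cache"]))
```

The `deploy` function never needs to know whether it is talking to one engine or a cluster, which mirrors why existing tooling does not have to be rewritten.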

Kubernetes by Google

Google also has its own container orchestration tool, called Kubernetes. It is built around a master and pods, where a pod is a group of one or more containers that are deployed together. The master is the control layer, which runs an API service that manages the whole orchestrator. Though a single master can control the entire setup, production environments usually have multiple masters. Kubernetes’ primary features include:

- Automated deployment and replication of containers

- Online scaling of container clusters

- Managed exposure of network ports to systems outside the cluster

- Load balancing of containers

- Upgrades of application containers

It also supports health checks at different levels.
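
Automated replication and scaling can be pictured as a loop that compares the desired replica count with what is actually running and healthy. The Python sketch below is a simplified illustration under assumed names (`reconcile`, the `replica-N` naming scheme, and the dictionary shape are all invented here); it does not use or reproduce the Kubernetes API.

```python
def reconcile(desired, running):
    """Return a replica map that matches the desired count.

    `running` maps replica name -> True if its health check passed
    (an assumed, simplified representation of cluster state).
    """
    # Drop replicas that failed their health check.
    alive = {name: ok for name, ok in running.items() if ok}

    # Scale up: start replacements until the desired count is reached.
    next_id = 0
    while len(alive) < desired:
        name = f"replica-{next_id}"
        if name not in alive:
            alive[name] = True
        next_id += 1

    # Scale down: remove surplus replicas, last in sorted order first.
    surplus = max(0, len(alive) - desired)
    for name in sorted(alive, reverse=True)[:surplus]:
        del alive[name]
    return alive


state = {"replica-0": True, "replica-1": False, "replica-2": True}
state = reconcile(3, state)      # replaces the failed replica
print(sorted(state))
state = reconcile(1, state)      # scales down to a single replica
print(sorted(state))
```

Running the same function repeatedly converges on the desired state, which is the general idea behind declarative scaling and self-healing.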

Apache Mesos/Marathon

Apache Mesos dates to the pre-Docker era. It is primarily a platform that manages computer clusters, using Linux cgroups to isolate CPU, I/O, file-system, and memory resources. It acts as a distributed systems kernel or, in simpler terms, a cluster platform that provides computing resources to frameworks. Marathon is one such framework, which specializes in running applications, including containers, on Mesos clusters.

These simple tools, with their rich functionality and powerful APIs, make containers and their orchestration a favorite among DevOps teams, who also integrate these tools into their CI/CD workflows.

Best Practices for Container Orchestration in IT Production

Companies that are evolving digitally and practicing DevOps are eager to use containers and container orchestration to optimize their IT infrastructure. Let’s look at some of the best practices that IT teams and managers should consider as they move container-based applications into production.

1. Draw Up a Clear Path from Development to Production

The first step to ensuring a smooth move to production with container orchestration is to map the path from development to production and to have a staging platform in place. Containers need to be tested, validated, and prepared for staging. The staging platform, created with or within an orchestration system, should be a replica of the actual production configuration and usually sits at the end of a DevOps process. Once the containers are stable, they can be moved to production. If a deployment runs into issues, the team should be able to roll back at any time. In some systems this is an automatic mechanism.
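
The roll-out and roll-back step can be sketched in a few lines of Python. The function names and the version strings below are hypothetical, and the health check is a placeholder for whatever validation the staging platform actually performs.

```python
from typing import Callable


def deploy_with_rollback(current: str, candidate: str,
                         health_check: Callable[[str], bool]) -> str:
    """Return the version left running after a rollout attempt."""
    print(f"rolling out {candidate} (previous: {current})")
    if health_check(candidate):
        return candidate
    # The candidate is unhealthy, so keep serving the previous version.
    print(f"{candidate} failed its health check, rolling back to {current}")
    return current


def staged_check(version: str) -> bool:
    """Placeholder check: pretend one specific build is known to be broken."""
    return version != "v2-broken"


live = deploy_with_rollback("v1", "v2-broken", staged_check)
live = deploy_with_rollback(live, "v2", staged_check)
print("live version:", live)
```

Keeping the previous version available until the new one passes its checks is what makes the rollback cheap.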

2. Monitoring & Automated Issue Reporting

The technology team needs to understand what is going on within the container orchestration system. Several monitoring and management tools are available to monitor containers, whether in the cloud or on premises. These monitoring systems enable technology teams to:

- Monitor system health by gathering data over time, such as processor, memory, and network utilization, and use it to find relationships that indicate success or failure.

- Take automatic actions based on those findings to prevent outages. Policies set up within the monitoring software make this possible through established rules.

- Report issues continuously and respond with fixes that are continuously tested, integrated, and deployed, so that issues are resolved in a short amount of time.
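
As an illustration of policy-driven automatic actions, the Python sketch below evaluates simple threshold rules over recent utilization samples. The `Policy` class, the metric names, the thresholds, and the action labels are all assumptions chosen for the example rather than features of any particular monitoring product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Policy:
    metric: str        # e.g. "cpu" or "memory", as a fraction of capacity
    threshold: float   # trigger when the recent average exceeds this value
    action: str        # label of the automatic action to take


def evaluate(samples, policies, window=3):
    """Return actions whose metric averaged above threshold over the last `window` samples."""
    actions = []
    for policy in policies:
        recent = samples.get(policy.metric, [])[-window:]
        if recent and mean(recent) > policy.threshold:
            actions.append(policy.action)
    return actions


samples = {
    "cpu": [0.55, 0.82, 0.91, 0.95],
    "memory": [0.40, 0.42, 0.41, 0.43],
}
policies = [
    Policy("cpu", 0.85, "scale-out"),
    Policy("memory", 0.90, "restart-container"),
]

print(evaluate(samples, policies))
```

Averaging over a short window rather than reacting to a single sample is one simple way to avoid triggering actions on momentary spikes.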

3. Set Up Automatic Data Backup and Disaster Recovery

While public clouds usually have a built-in disaster recovery mechanism, data can still be accidentally removed or corrupted, and failover may not be seamless. The development team needs to store data either within the container where the application is running or in an external database, which may itself be container-based. Wherever the data is stored, it must be replicated to secondary, independent storage systems and protected. Users should also be able to perform backup and recovery operations, and security controls need to be set up so that access follows the customer’s policies. To achieve this, data recovery operations need to be defined, set up, and tested thoroughly enough to be known to work.

4. Capacity Planning for Production

Capacity planning for production is an essential best practice for both on-premises and public cloud-based systems. The development team should follow these guidelines while planning for production capacity:

- Understand the current, near-future, and long-term capacity requirements in terms of the infrastructure needed by orchestration systems. This includes servers, storage, network, databases, etc.

- Understand the interrelationship between the containers, container orchestration, and other supporting systems (e.g., databases) and their impact on capacity.

- Model the capacity of the servers in terms of storage, networking, security, etc., by configuring these servers virtually within a public cloud provider, or physically using the traditional method.

- Account for near-future, mid-future, and long-term growth plans, and model capacity around the resources forecast for that growth.
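
A back-of-the-envelope capacity model can be written in a few lines of Python. The compound monthly growth rate, the containers-per-host figure, and the 20% headroom below are made-up inputs for illustration; real planning should use measured workloads and forecasts.

```python
import math


def hosts_needed(containers_now, monthly_growth, months,
                 containers_per_host, headroom=0.2):
    """Estimate the host count after compound growth, keeping spare headroom."""
    projected = containers_now * (1 + monthly_growth) ** months
    usable_per_host = containers_per_host * (1 - headroom)
    return math.ceil(projected / usable_per_host)


# Hypothetical starting point: 200 containers, 5% growth per month, 20 per host.
for label, months in [("near-future", 3), ("mid-future", 12), ("long-term", 36)]:
    print(label, hosts_needed(200, 0.05, months, containers_per_host=20))
```

Reserving headroom per host leaves room for failover and traffic spikes, so the estimate is deliberately higher than a simple division would suggest.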

Conclusion

While container orchestration is still in its infancy, containerized architectures are quickly becoming a part of DevOps CI/CD workflows. The benefits of container orchestration stretch well beyond ensuring business continuity and accelerating time to market. With rich orchestration tools enabling interaction between containers through well-defined interfaces, the modular container model serves as the backbone, or ideal deployment vehicle, for microservice architectures. Microservice architectures let organizations build their solutions around a set of decoupled services, and containers are widely seen as a natural fit for them.

If you have questions about container orchestration or need consultancy on DevOps, feel free to get in touch with one of our experts.